3 Analysing Data

Let’s again begin by loading the package.

# Load the package, and set a global random-number seed

# for the reproducible generation of fake data later on.

library(pavo)

set.seed(1612217)3.1 Analysing Spectral Data

3.1.1 Spectral Dataset Description

The raw spectral data used in this example are available from the package repository on github, located here. You can download and extract it to follow the vignette exactly. Alternatively, the data are included as an RData file as part of the package installation, and so can be loaded directly (see below).

The data consist of reflectance spectra, obtained using Avantes equipment and software, from seven bird species: Northern Cardinal Cardinalis cardinalis, Wattled Jacana Jacana jacana, Baltimore Oriole Icterus galbula, Peach-fronted Parakeet Aratinga aurea, American Robin Turdus migratorius, and Sayaca Tanager Thraupis sayaca. Several individuals were measured (sample size varies by species), and 3 spectra were collected from each individual. However, the number of individuals measured per species is uneven and the data have additional peculiarities that should emphasize the flexibility pavo offers, as we’ll see below.

In addition, pavo includes three datasets that can be called with the data function. data(teal), data(sicalis), and data(flowers) will all be used in this vignette. See the help files for each dataset for more information; via ?teal, ?sicalis, and ?flowers.

specs <- readRDS(system.file("extdata/specsdata.rds",

package = "pavo"

))

mspecs <- aggspec(specs, by = 3, FUN = mean)

spp <- gsub("\\.[0-9].*$", "", names(mspecs))[-1]

sppspec <- aggspec(mspecs, by = spp, FUN = mean)

spec.sm <- procspec(sppspec, opt = "smooth", span = 0.2)#> processing options applied:

#> smoothing spectra with a span of 0.23.1.2 Overview

pavo offers two main approaches for spectral data analysis. First, colour variables can be calculated based on the shape of the reflectance spectra. By using special R classes for spectra data frame objects, this can easily be done using the summary function with an rspec object (see below). The function peakshape() also returns descriptors for individual peaks in spectral curves, as outlined below.

Second, reflectance spectra can be analysed by accounting for the visual system receiving the colour signal, therefore representing reflectance spectra as perceived colours. To that end, we have implemented a suite of visual models and colourspaces including; the receptor-noise limited model of model of Vorobyev et al. (1998), Endler (1990)’s segment classification method, flexible di- tri- and tetra-chromatic colourspaces, the Hexagon model of Chittka (1992), the colour-opponent coding space of Backhaus (1991), CIELAB and CIEXYZ spaces, and the categorical model of Troje (1993).

3.1.3 Spectral Shape Analysis

3.1.3.1 Colourimetric Variables

Obtaining colourimetric variables (pertaining to hue, saturation and brightness/value) is pretty straightforward in pavo. Since reflectance spectra is stored in an object of class rspec, the summary function recognizes the object as such and extracts 23 variables, as outlined in Montgomerie (2006) (and reproduced here). Though outlined in a book chapter on bird colouration, these variables are broadly applicable to any reflectance data, particularly if the taxon of interest has colour vision within the UV-human visible range.

The description and formulas for these variables can be found by running ?summary.rspec.

summary(spec.sm)#> B1 B2 B3 S1U S1V

#> cardinal 8984.266 22.40465 52.70167 0.16614848 0.19110215

#> jacana 9668.503 24.11098 53.78744 0.09601072 0.11022142

#> oriole 9108.474 22.71440 54.15508 0.07484472 0.08323604

#> parakeet 6020.733 15.01430 29.86504 0.16792815 0.18501247

#> robin 5741.392 14.31769 37.85542 0.08503645 0.10014331

#> tanager 8515.251 21.23504 30.48108 0.20322408 0.23904955

#> S1B S1G S1Y S1R S2

#> cardinal 0.11783711 0.1898843 0.2519174 0.5333116 7.607769

#> jacana 0.19494363 0.3079239 0.2482692 0.4083129 7.101897

#> oriole 0.05728179 0.3254691 0.3624909 0.5492239 14.507969

#> parakeet 0.14407795 0.4244676 0.3395961 0.2718894 5.111074

#> robin 0.14428130 0.2673730 0.2668138 0.5100635 9.130156

#> tanager 0.26133545 0.3198724 0.2430666 0.2238857 3.226417

#> S3 S4 S5 S6 S7 S8

#> cardinal 0.2954315 0.20287401 4003.465 45.77432 -0.2055325 2.043072

#> jacana 0.2471677 0.05315822 3314.024 46.21377 -0.3054756 1.916710

#> oriole 0.2986678 0.08730015 5233.013 50.42230 -0.5847404 2.219838

#> parakeet 0.4483808 0.27397999 1894.018 24.02183 0.6656480 1.599931

#> robin 0.3031501 NA 2532.414 33.70923 -0.1076722 2.354377

#> tanager 0.3343114 0.16775097 1106.471 21.03373 -0.7851970 0.990520

#> S9 S10 H1 H2 H3 H4 H5

#> cardinal 0.83791691 0.4144862 700 419 587 0.02130606 581

#> jacana 0.73309578 0.1018889 700 593 529 0.71519543 468

#> oriole 0.92483219 0.1937922 700 382 551 0.41436251 544

#> parakeet 0.58642273 0.4383490 572 618 634 0.97835569 506

#> robin 0.81473611 NA 700 NA 593 0.42040115 631

#> tanager -0.04551277 0.1661607 557 594 362 1.52666571 518summary also takes an additional argument subset which if changed from the default FALSE to TRUE will return only the most commonly used colourimetrics (Andersson and Prager 2006). summary can also take a vector of colour variable names, which can be used to filter the results

summary(spec.sm, subset = TRUE)#> B2 S8 H1

#> cardinal 22.40465 2.043072 700

#> jacana 24.11098 1.916710 700

#> oriole 22.71440 2.219838 700

#> parakeet 15.01430 1.599931 572

#> robin 14.31769 2.354377 700

#> tanager 21.23504 0.990520 557#> B1 B2 B3

#> cardinal 8984.266 22.40465 52.70167

#> jacana 9668.503 24.11098 53.78744

#> oriole 9108.474 22.71440 54.15508

#> parakeet 6020.733 15.01430 29.86504

#> robin 5741.392 14.31769 37.85542

#> tanager 8515.251 21.23504 30.481083.1.3.2 Peak Shape Descriptors

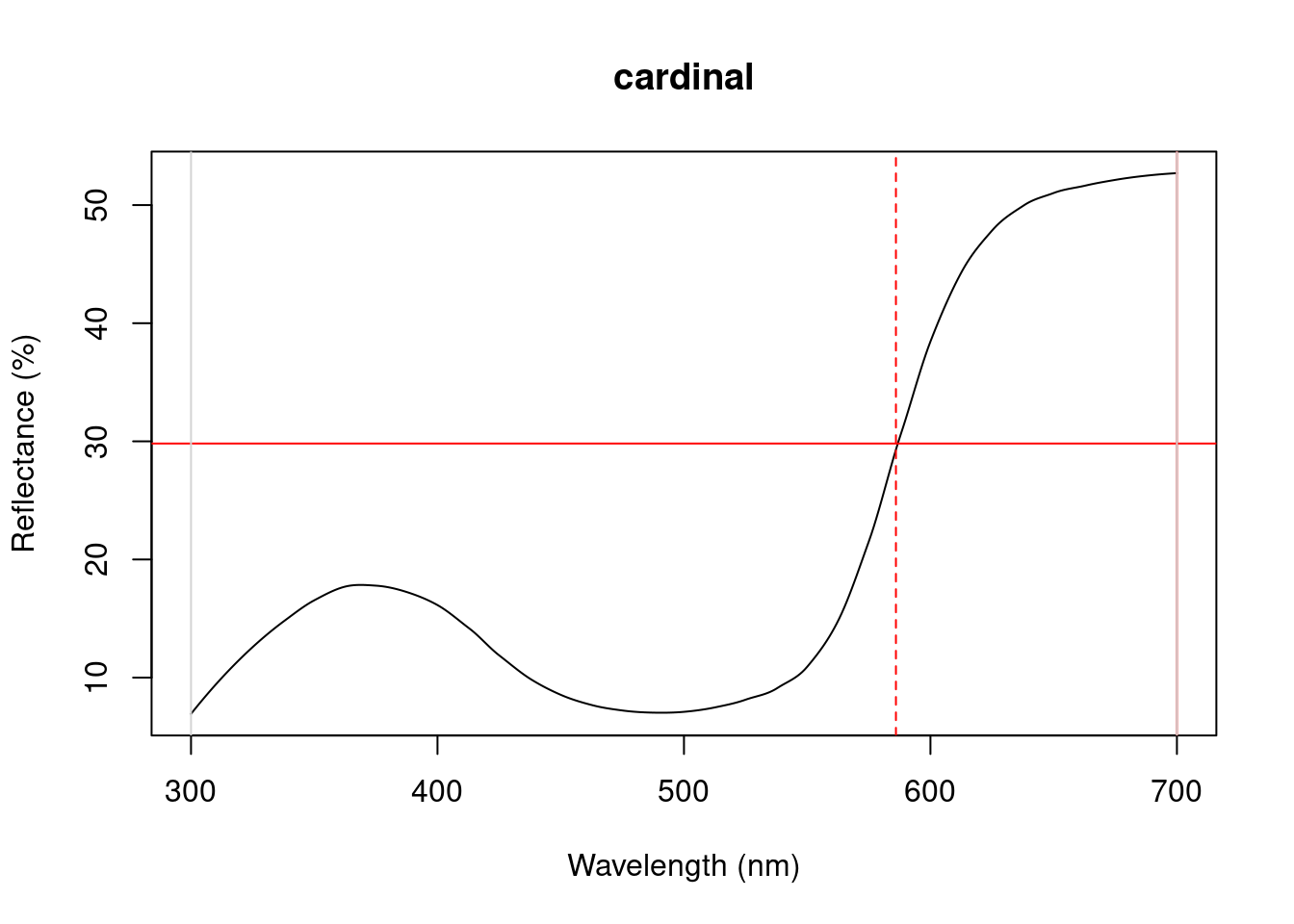

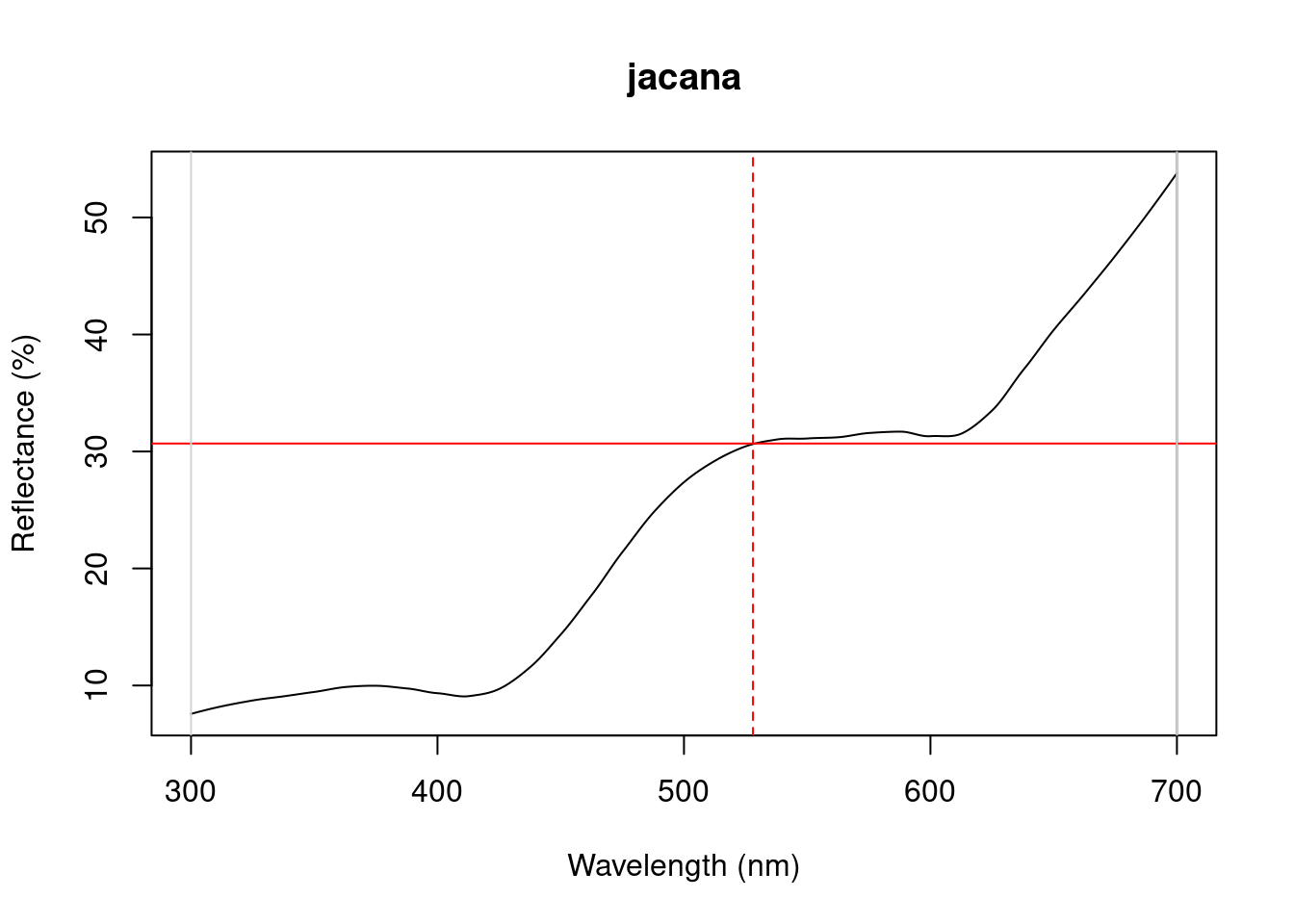

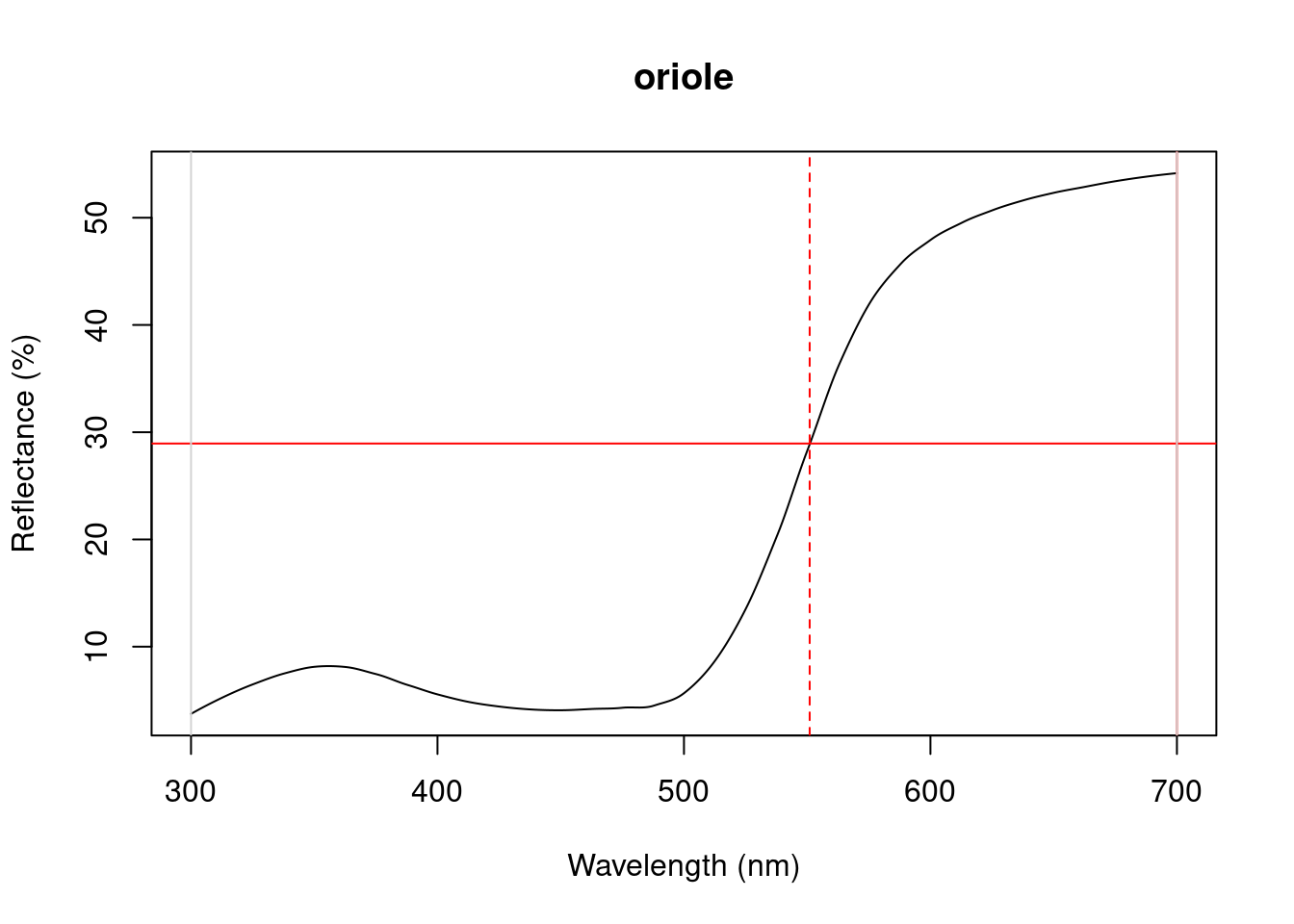

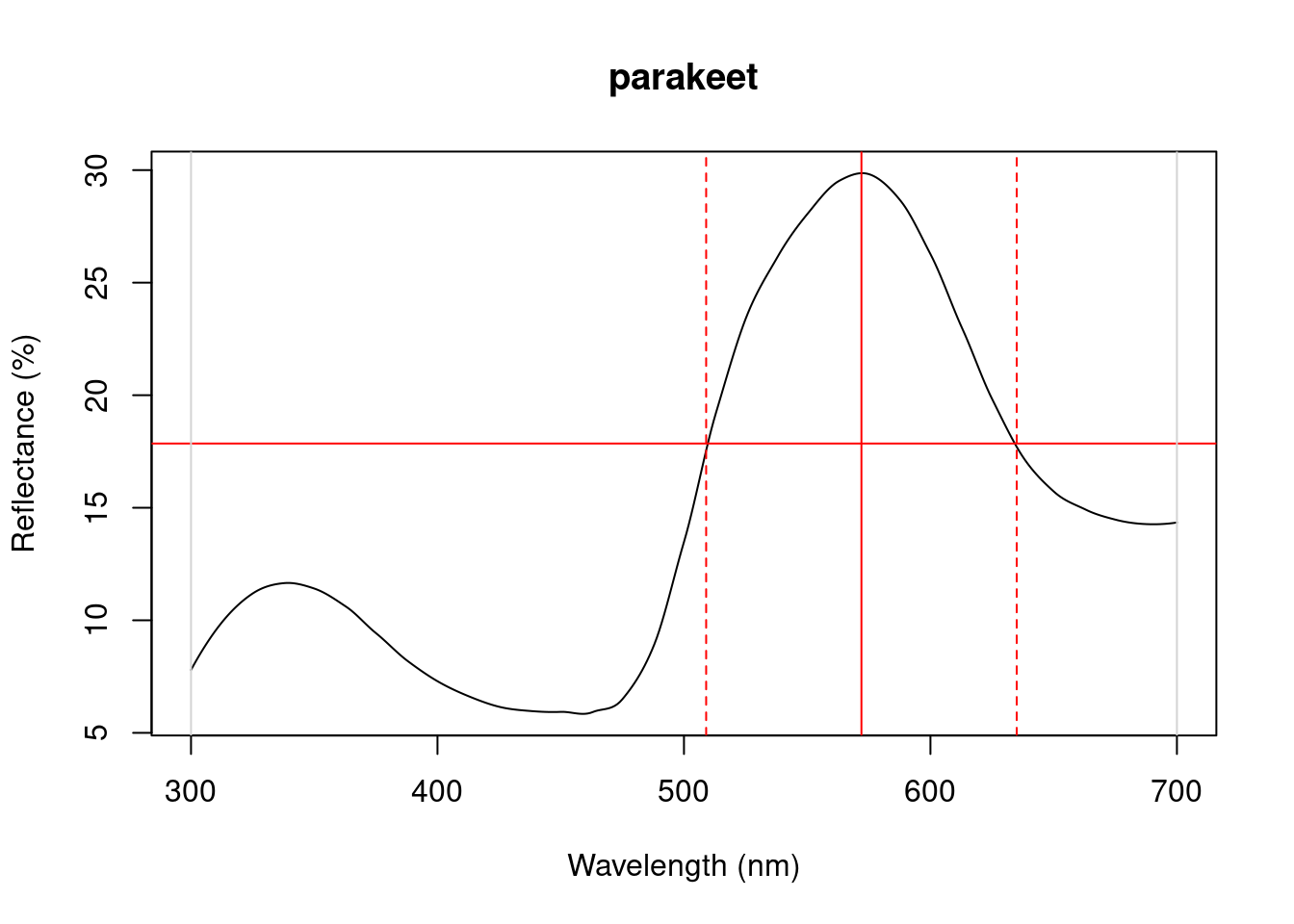

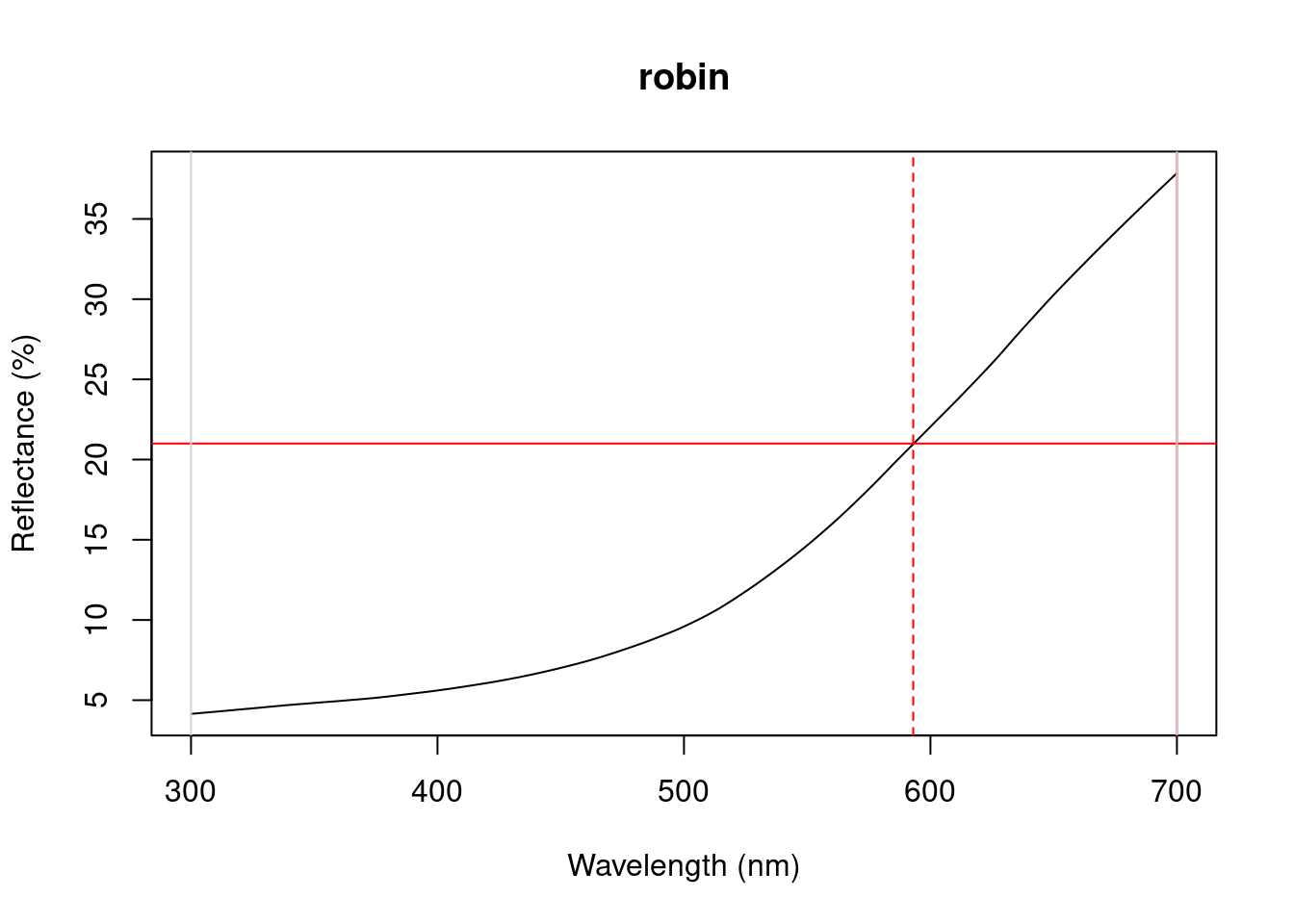

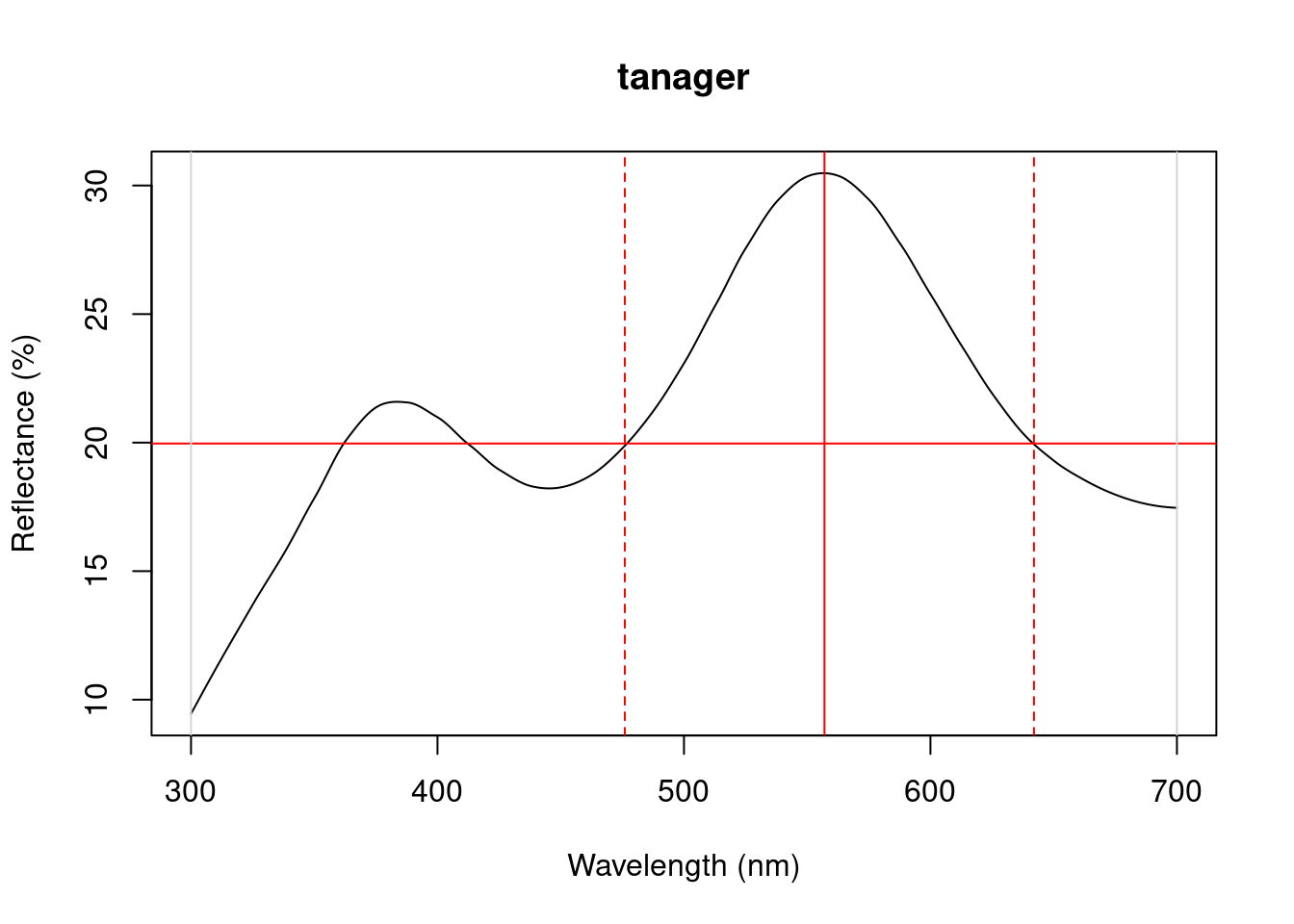

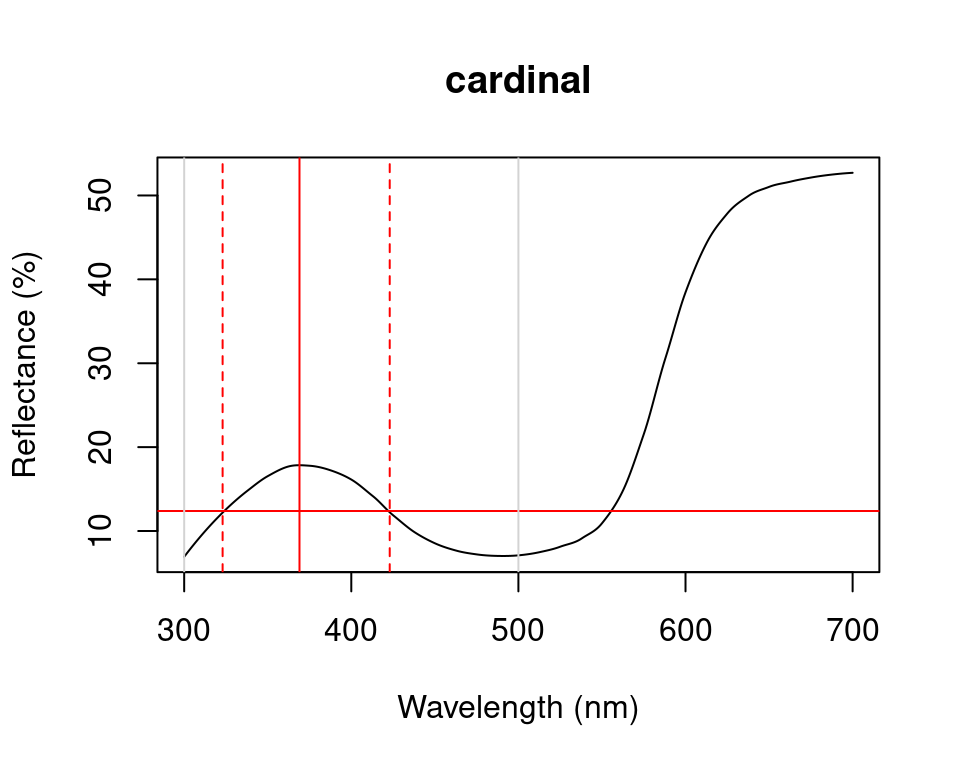

Particularly in cases of reflectance spectra that have multiple discrete peaks (in which case the summary function will only return variables based on the tallest peak in the curve), it might be useful to obtain variables that describe individual peak’s properties. The peakshape() function identifies the peak location (H1), returns the reflectance at that point (B3), and identifies the wavelengths at which the reflectance is half that at the peak, calculating the wavelength bandwidth of that interval (the Full Width at Half Maximum, or FWHM). The function also returns the half widths, which are useful when the peaks are located near the edge of the measurement limit and half maximum reflectance can only be reliably estimated from one of its sides.

If this all sounds too esoteric, fear not: peakshape() has the option of returning plots indicating what it’s calculating. The vertical continuous red line indicates the peak location, the horizontal continuous red line indicates the half-maximum reflectance, and the distance between the dashed lines (HWHM.l and HWHM.r) is the FWHM:

peakshape(spec.sm, plot = TRUE)#> id B3 H1 FWHM HWHM.l HWHM.r incl.min

#> 1 cardinal 52.70167 700 NA 114 NA Yes

#> 2 jacana 53.78744 700 NA 172 NA Yes

#> 3 oriole 54.15508 700 NA 149 NA Yes

#> 4 parakeet 29.86504 572 126 63 63 Yes

#> 5 robin 37.85542 700 NA 107 NA Yes

#> 6 tanager 30.48108 557 166 81 85 Yes

Figure 3.1: Plots from peakshape

As it can be seen, the variable FWHM is meaningless if the curve doesn’t have a clear peak. Sometimes, such as in the case of the Cardinal (Above figure, first panel), there might be a peak which is not the point of maximum reflectance of the entire spectral curve. peakshape() also offers a select argument to facilitate subsetting the spectra data frame to, for example, focus on a single reflectance peak:

Figure 3.2: Plot from peakshape, setting the wavelength limits to 300 and 500 nm

#> id B3 H1 FWHM HWHM.l HWHM.r incl.min

#> 1 cardinal 17.84381 369 100 46 54 Yes3.1.4 Visual Modelling

3.1.4.1 Overview

All visual and colourspace modelling and plotting options are wrapped into four main function: vismodel(), colspace(), coldist() (or its bootstrap-based variant bootcoldist()), and plot. As detailed below, these functions cover the basic processes common to most modelling approaches. Namely, the estimation of quantum catches, their conversion to an appropriate space, estimating the distances between samples, and visualising the results. As a brief example, a typical workflow for modelling might use:

-

vismodel()to estimate photoreceptor quantum catches. The assumptions of visual models may differ dramatically, so be sure to select options that are appropriate for your intended use. -

colspace()to convert the results ofvismodel()(or user-provided quantum catches) into points in a given colourspace, specified with thespaceargument. If nospaceargument is provided,colspace()will automatically select the di-, tri- or tetrahedral colourspace, depending on the nature of the input data. The result ofcolspace()will be an object of classcolspace(that inherits fromdata.frame), and will contain the location of stimuli in the selected space along with any associated colour variables. -

coldist()orbootcoldist()to estimate the colour distances between points in a colourspace (if the input is fromcolspace), or the ‘perceptual’, noise-weighted distances in the receptor-noise limited model (if the input is fromvismodel()whererelative = FALSE). -

plotthe output.plotwill automatically select the appropriate visualisation based on the inputcolspaceobject, and will also accept various graphical parameters depending on the colourspace (see?plot.colspaceand links therein for details).

3.1.4.2 Accessing and Plotting In-built Spectral Data

All in-built spectral data in pavo can be easily retrieved and/or plotted via the sensdata() function. These data can be visualised directly by including plot = TRUE, or assigned to an object for subsequent use, as in:

#> wl musca.u musca.s musca.m musca.l md.r1

#> 1 300 0.01148992 0.006726680 0.001533875 0.001524705 0.001713692

#> 2 301 0.01152860 0.006749516 0.001535080 0.001534918 0.001731120

#> 3 302 0.01156790 0.006773283 0.001536451 0.001545578 0.001751792

#> 4 303 0.01160839 0.006798036 0.001537982 0.001556695 0.001775960

#> 5 304 0.01165060 0.006823827 0.001539667 0.001568283 0.001803871

#> 6 305 0.01169510 0.006850712 0.001541499 0.001580352 0.0018357773.1.4.3 Visual Phenotypes

pavo contains numerous di-, tri- and tetrachromatic visual systems, to accompany the suite of new models. The full complement of included systems are accessible via the vismodel() argument visual, and include:

| phenotype | description |

|---|---|

| avg.uv | average ultraviolet-sensitive avian (tetrachromat) |

| avg.v | average violet-sensitive avian (tetrachromat) |

| bluetit | The blue tit Cyanistes caeruleus (tetrachromat) |

| star | The starling Sturnus vulgaris (tetrachromat) |

| pfowl | The peafowl Pavo cristatus (tetrachromat) |

| apis | The honeybee Apis mellifera (trichromat) |

| ctenophorus | The ornate dragon lizard Ctenophorus ornatus (trichromat) |

| canis | The canid Canis familiaris (dichromat) |

| musca | The housefly Musca domestica (tetrachromat) |

| cie2 | 2-degree colour matching functions for CIE models of human colour vision (trichromat) |

| cie10 | 10-degree colour matching functions for CIE models of human colour vision (trichromat) |

| segment | A generic ‘viewer’ with broad sensitivities for use in the segment analysis of Endler (1990) (tetrachromat) |

| habronattus | The jumping spider Habronattus pyrrithrix (trichromat) |

| rhinecanthus | The triggerfish Rhinecanthus aculeatus (trichromat) |

| drosophila | The vinegar fly Drosophila melanogaster |

3.1.4.4 Estimating Quantum Catch

Numerous models have been developed to understand how colours are perceived and discriminated by an individual’s or species’ visual system (described in detail in Endler 1990; Renoult, Kelber, and Schaefer 2017; Vorobyev et al. 1998). In essence, these models take into account the receptor sensitivity of the different receptors that make the visual system in question and quantify how a given colour would stimulate those receptors individually, and their combined effect on the perception of colour. These models also have an important component of assuming and interpreting the chromatic component of colour (hue and saturation) to be processed independently of the achromatic (brightness, or luminance) component. This provides a flexible framework allowing for a tiered model construction, in which information on aspects such as different illumination sources, backgrounds, and visual systems can be considered and compared.

To apply any such model, we first need to quantify receptor excitation and then consider how the signal is being processed, while possibly considering the relative density of different cones and the noise-to-signal ratio.

To quantify the stimulation of receptors by a given stimulus, we will use the function vismodel(). This takes an rspec dataframe as a minimal input, and the user can either select from the available options or input their own data for the additional arguments in the function:

-

visual: the visual system to be used. Available inbuilt options are detailed above, or the user may include their own dataframe, with the first column being the wavelength range and the following columns being the absorbance at each wavelength for each cone type (see below for an example). -

achromatic: Either a receptor’s sensitivity data (available options include the blue tit, chicken, and starling double-cones, and the housefly’s R1-6 receptor), which can also be user-defined as above; or the longest-wavelength receptor, the sum of the two longest-wavelength receptors, or the sum of all receptors can be used. Alternatively,nonecan be specified for no achromatic stimulation calculation. -

illum: The illuminant being considered. By default, it considers an ideal white illuminant, but implemented options are a blue sky, standard daylight, and forest shade illuminants. A vector of same length as the wavelength range being considered can also be used as the input. -

trans: Models of the effects of light transmission (e.g. through noisy environments or ocular filters). The argument defaults toideal(i.e. no effect), though users can also use the built-in options ofbluetitorblackbirdto model the ocular transmission of blue tits/blackbirds, or specify a user-defined vector containing transmission spectra. -

qcatch: This argument determines what photon catch data should be returned-

Qi: The receptor quantum catches, calculated for receptor \(i\) as: \[Q_i = \int_\lambda{R_i(\lambda)S(\lambda)I(\lambda)d\lambda}\] Where \(\lambda\) denotes the wavelength, \(R_i(\lambda)\) the spectral sensitivity of receptor \(i\), \(S(\lambda)\) the reflectance spectrum of the colour, and \(I(\lambda)\) the illuminant spectrum. -

fi: The receptor quantum catches transformed according to Fechner’s law, in which the signal of the receptor is proportional to the logarithm of the quantum catch i.e. \(f_i = \ln(Q_i)\) -

Ei: the hyperbolic transform (a simplification of the Michaelis–Menten photoreceptor equation), where \[E_i = \frac{Q_i}{Q_i + 1}\].

-

-

bkg: The background being considered. By default, it considers an idealized background (i.e. wavelength-independent influence of the background on colour). A vector of same length as the wavelength range being considered can also be used as the input. -

vonkries: a logical argument which determines if the von Kries transformation (which normalizes receptor quantum catches to the background, thus accounting for receptor adaptation) is to be applied (defaults toFALSE). IfTRUE, \(Q_i\) is multiplied by a constant \(k\), which describes the von Kries transformation: \[k_i = \frac{1}{\int_\lambda R_i(\lambda)S^b(\lambda)I(\lambda)d\lambda}\] Where \(S^b\) denotes the reflectance spectra of the background. -

scale: This argument defines how the illuminant should be scaled. The scale of the illuminant is critical for receptor noise models in which the signal intensity influences the noise (see Receptor noise section, below). Illuminant curves should be in units of \(\mu mol.s^{-1}.m^{-2}\) in order to yield physiologically meaningful results. (Some software return illuminant information values in \(\mu Watt.cm^{-2}\), and must be converted to \(\mu mol.s^{-1}.m^{-2}\). This can be done by using theirrad2flux()andflux2irrad()functions.) Therefore, if the user-specified illuminant curves are not in these units (i.e. are measured proportional to a white standard, for example), thescaleparameter can be used as a multiplier to yield curves that are at least a reasonable approximation of the illuminant value. Commonly used values are 500 for dim conditions and 10,000 for bright conditions. -

relative: IfTRUE, it will make the cone stimulations relative to their sum. This is appropriate for general colourspace models(e.g. Goldsmith 1990; Stoddard and Prum 2008). For the receptor-noise model, however, it is important to setrelative = FALSE.

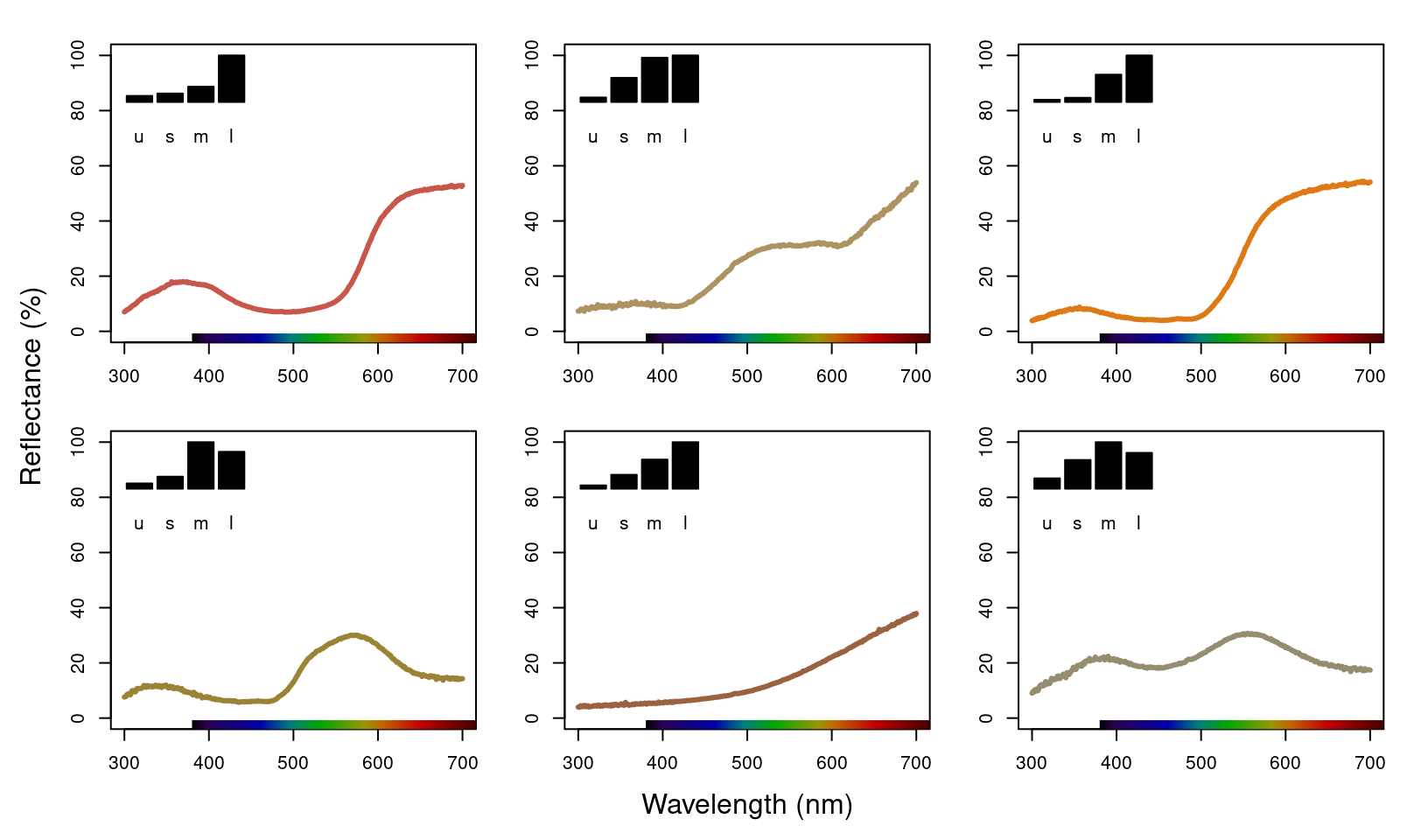

All visual models begin with the estimation of receptor quantum catches. The requirements of models may differ significantly of course, so be sure to consult the function documentation and original publications. For this example, we will use the average reflectance of the different species to calculate the raw stimulation of retinal cones, considering the avian average UV visual system, a standard daylight illumination, and an idealized background.

vismod1 <- vismodel(sppspec,

visual = "avg.uv", achromatic = "bt.dc",

illum = "D65", relative = FALSE

)Since there are multiple parameters that can be used to customize the output of vismodel(), as detailed above, for convenience these can be returned by using summary in a vismodel object:

summary(vismod1)#> visual model options:

#> * Quantal catch: Qi

#> * Visual system, chromatic: avg.uv

#> * Visual system, achromatic: bt.dc

#> * Illuminant: D65, scale = 1 (von Kries colour correction not applied)

#> * Background: ideal

#> * Transmission: ideal

#> * Relative: FALSE#> u s m

#> Min. :0.01671 Min. :0.04199 Min. :0.1325

#> 1st Qu.:0.02408 1st Qu.:0.06860 1st Qu.:0.1753

#> Median :0.03039 Median :0.07148 Median :0.2675

#> Mean :0.03599 Mean :0.09924 Mean :0.2338

#> 3rd Qu.:0.04731 3rd Qu.:0.14265 3rd Qu.:0.2819

#> Max. :0.06353 Max. :0.17648 Max. :0.3037

#> l lum

#> Min. :0.2092 Min. :0.1513

#> 1st Qu.:0.2232 1st Qu.:0.1966

#> Median :0.2759 Median :0.2247

#> Mean :0.3076 Mean :0.2241

#> 3rd Qu.:0.3815 3rd Qu.:0.2661

#> Max. :0.4625 Max. :0.2766We can visualise what vismodel() is doing when estimating quantum catches by comparing the reflectance spectra to the estimates they are generating:

oldpar <- par(no.readonly = TRUE)

par(mfrow = c(2, 6), oma = c(3, 3, 0, 0))

layout(rbind(

c(2, 1, 4, 3, 6, 5),

c(1, 1, 3, 3, 5, 5),

c(8, 7, 10, 9, 12, 11),

c(7, 7, 9, 9, 11, 11)

))

sppspecol <- spec2rgb(sppspec)

for (i in 1:6) {

par(mar = c(2, 2, 2, 2))

plot(sppspec,

select = i + 1,

col = sppspecol,

lwd = 3,

ylim = c(0, 100)

)

par(mar = c(4.1, 2.5, 2.5, 2))

barplot(as.matrix(vismod1[i, 1:4]),

yaxt = "n",

col = "black"

)

}

mtext("Wavelength (nm)", side = 1, outer = TRUE, line = 1)

mtext("Reflectance (%)", side = 2, outer = TRUE, line = 1)

Figure 3.3: Plots of species mean reflectance curves with corresponding relative usml cone stimulations (insets).

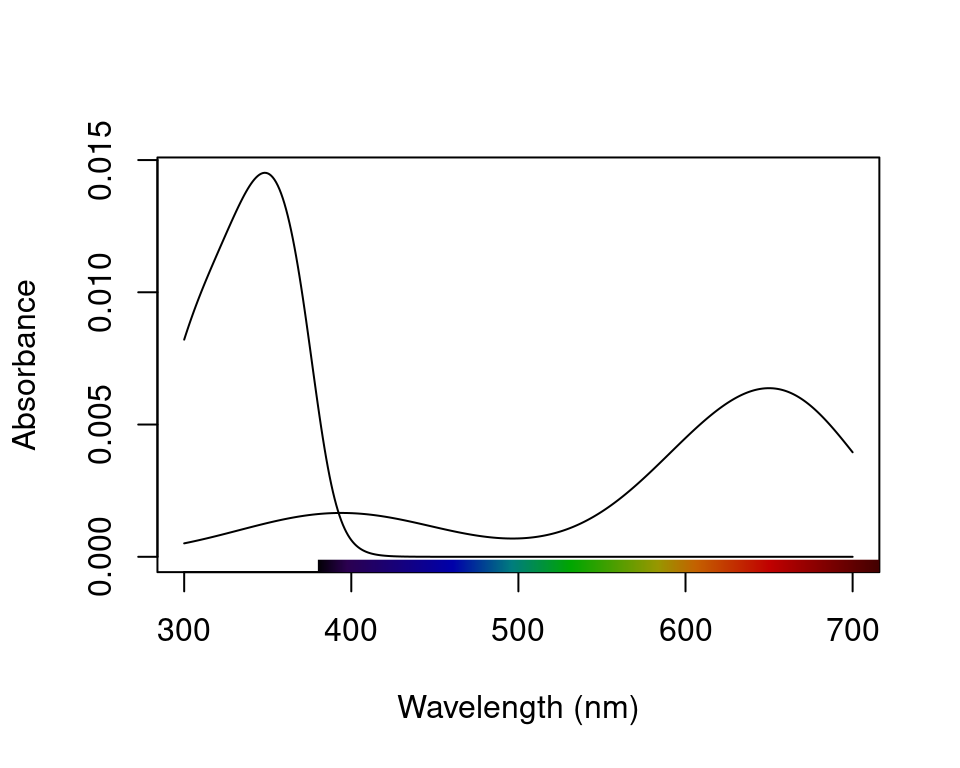

par(oldpar)As described above, vismodel also accepts user-defined visual systems, background and illuminants. We will illustrate this by showcasing the function sensmodel, which models spectral sensitivities of retinas based on their peak cone sensitivity, as described in Govardovskii et al. (2000) and Hart and Vorobyev (2005). sensmodel takes several optional arguments, but the main one is a vector containing the peak sensitivities for the cones being modelled. Let’s model an idealized dichromat visual system, with cones peaking in sensitivity at 350 and 650 nm:

Figure 3.4: The receptor sensitivities of an idealised dichromat created using sensmodel().

vismod.idi <- vismodel(sppspec,

visual = ideal_dichromat,

relative = FALSE

)

vismod.idi#> lmax350 lmax650 lum

#> cardinal 0.14578161 0.3519237 NA

#> jacana 0.09155090 0.3179246 NA

#> oriole 0.06981615 0.3775647 NA

#> parakeet 0.10579320 0.1727374 NA

#> robin 0.04727061 0.2174901 NA

#> tanager 0.16438304 0.2148739 NA3.1.4.5 The Receptor Noise Model

The receptor-noise limited (RNL) model of Vorobyev et al. (1998), Vorobyev et al. (2001) offers a basis for estimating the ‘perceptual’ distance between coloured stimuli (depending on available physiological and behavioural data), and assumes that the simultaneous discrimination of colours is fundamentally limited by photoreceptor noise. Colour distances under the RNL model can be calculated by using the inverse of the noise-to-signal ratio, known as the Weber fraction (\(w_i\) for each cone \(i\)). The Weber fraction can be calculated from the noise-to-signal ratio of cone \(i\) (\(v_i\)) and the relative number of receptor cells of type \(i\) within the receptor field (\(n_i\)):

\[w_i = \frac{v_i}{\sqrt{n_i}}\]

\(w_i\) is the value used for the noise when considering only neural noise mechanisms. Alternatively, the model can consider that the intensity of the colour signal itself contributes to the noise (photoreceptor, or quantum, noise). In this case, the noise for a receptor \(i\) is calculated as:

\[w_i = \sqrt{\frac{v_i^2}{\sqrt{n_i}} + \frac{2}{Q_a+Q_b}}\]

where \(a\) and \(b\) refer to the two colour signals being compared. Note that when the values of \(Q_a\) and \(Q_b\) are very high, the second portion of the equation tends to zero, and the both formulas should yield similar results. Hence, it is important that the quantum catch are calculated in the appropriate illuminant scale, as described above.

Colour distances are obtained by weighting the Euclidean distance of the photoreceptor quantum catches by the Weber fraction of the cones (\(\Delta S\)). The units of these measures are noise-weighted Euclidean distances measurements, and are often referred to as \(\Delta S\) for chromatic distances, and \(\Delta L\) for achromatic, or luminance, distances. The relevant calculations are:

For dichromats: \[\Delta S = \sqrt{\frac{(\Delta f_1 - \Delta f_2)^2}{w_1^2+w_2^2}}\]

For trichromats: \[\Delta S = \sqrt{\frac{w_1^2(\Delta f_3 - \Delta f_2)^2 + w_2^2(\Delta f_3 - \Delta f_1)^2 + w_3^2(\Delta f_1 - \Delta f_2)^2 }{ (w_1w_2)^2 + (w_1w_3)^2 + (w_2w_3)^2 }}\]

For tetrachromats: \[\Delta S = \sqrt{(w_1w_2)^2(\Delta f_4 - \Delta f_3)^2 + (w_1w_3)^2(\Delta f_4 - \Delta f_2)^2 + (w_1w_4)^2(\Delta f_3 - \Delta f_2)^2 + \\ (w_2w_3)^2(\Delta f_4 - \Delta f_1)^2 + (w_2w_4)^2(\Delta f_3 - \Delta f_1)^2 + (w_3w_4)^2(\Delta f_2 - \Delta f_1)^2 / \\ ((w_1w_2w_3)^2 + (w_1w_2w_4)^2 + (w_1w_3w_4)^2 + (w_2w_3w_4)^2)}\]

For the chromatic contrast. The achromatic contrast (\(\Delta L\)) can be calculated based on the double cone or the receptor (or combination of receptors) responsible for chromatic processing by the equation:

\[\Delta L = \frac{\Delta f}{w}\]

3.1.4.5.1 A Brief Note on Terminology and ‘Just Noticeable Differences’ (JNDs)

The receptor-noise limited model is often closely associated with the concept of ‘just noticeable differences’ (JNDs), to the point where JNDs are presented as the unit of distance or measurement between stimuli in this model/space. The JND is not unique to the receptor-noise limited model, however, nor is it accurately described as a unit of measurement. A JND is simply a contrast threshold which correlates with some discrimination criteria, such as 80% correct choices in a simultaneous-discrimination test, and empirical work continues to show that this correlation is often species-, sex-, individual-, condition-, and/or stimulus-specific. We are therefore supportive of the move in the literature toward divorcing the concept of JND’s from the receptor-noise model specifically and prefer the more accurate description of distances in receptor-noise space as ‘noise-weighted Euclidean distances’, abbreviated to \(\Delta S\) (for chromatic distances) and \(\Delta L\) (for achromatic distances). Specific noise-weighted Euclidean distances such as \(\Delta S = 1\) will indeed correlate with a threshold of discrimination, or a ‘just-noticeable difference’. The circumstances under which this holds (species, sex, viewing conditions, region of colourspace the stimulus occupies etc.) require behavioural validation, however, and the empirically estimated threshold under a given set of circumstances is unlikely to hold firm in the face of gross variation in such conditions.

3.1.4.5.2 Noise-weighted Distances with coldist() and bootcoldist()

pavo implements the noise-weighted colour distance calculations in the functions coldist() and bootcoldist(), assuming that raw receptor quantum catches are provided (via relative = FALSE in vismodel()). For the achromatic contrast, coldist() uses n4 to calculate \(w\) for the achromatic contrast. Note that even if \(Q_i\) is chosen, values are still log-transformed. This option is available in case the user wants to specify a data frame of quantum catches that was not generated by vismodel() as an input. In this case, the argument qcatch should be used to inform the function if \(Q_i\) or \(f_i\) values are being used (note that if the input to coldist() is an object generated using the vismodel() function, this argument is ignored.) The type of noise to be calculated can be selected from the coldist() argument noise (which accepts either "neural" or "quantum").

coldist(vismod1,

noise = "neural", achromatic = TRUE, n = c(1, 2, 2, 4),

weber = 0.1, weber.achro = 0.1

)#> patch1 patch2 dS dL

#> 1 cardinal jacana 8.436191 3.6082787

#> 2 cardinal oriole 7.874540 3.5245174

#> 3 cardinal parakeet 8.010167 0.7553289

#> 4 cardinal robin 5.865464 2.4275512

#> 5 cardinal tanager 10.116173 2.2442493

#> 6 jacana oriole 10.114671 0.0837613

#> 7 jacana parakeet 4.316712 2.8529498

#> 8 jacana robin 3.499380 6.0358299

#> 9 jacana tanager 5.064380 1.3640294

#> 10 oriole parakeet 8.147778 2.7691885

#> 11 oriole robin 7.200079 5.9520686

#> 12 oriole tanager 13.532690 1.2802681

#> 13 parakeet robin 4.744364 3.1828801

#> 14 parakeet tanager 5.886396 1.4889204

#> 15 robin tanager 7.679779 4.6718005#> patch1 patch2 dS dL

#> 1 cardinal jacana 2.0993283 NA

#> 2 cardinal oriole 4.6567462 NA

#> 3 cardinal parakeet 2.2575457 NA

#> 4 cardinal robin 3.7236780 NA

#> 5 cardinal tanager 3.5417700 NA

#> 6 jacana oriole 2.5574178 NA

#> 7 jacana parakeet 4.3568740 NA

#> 8 jacana robin 1.6243496 NA

#> 9 jacana tanager 5.6410983 NA

#> 10 oriole parakeet 6.9142918 NA

#> 11 oriole robin 0.9330682 NA

#> 12 oriole tanager 8.1985162 NA

#> 13 parakeet robin 5.9812236 NA

#> 14 parakeet tanager 1.2842244 NA

#> 15 robin tanager 7.2654480 NAWhere dS is the chromatic contrast (\(\Delta S\)) and dL is the achromatic contrast (\(\Delta L\)). Note that, by default, achromatic = FALSE, so dL isn’t included in the second result (this is to maintain consistency since, in the vismodel() function, the achromatic argument defaults to none). As expected, values are really high under the avian colour vision, since the colours of these species are quite different and because of the enhanced discriminatory ability with four compared to two cones.

coldist() also has a subset argument, which is useful if only certain comparisons are of interest (for example, of colour patches against a background, or only comparisons among a species or body patch). subset can be a vector of length one or two. If only one subsetting option is passed, all comparisons against the matching argument are returned (useful in the case of comparing to a background, for example). If two values are passed, comparisons will only be made between samples that match that rule (partial string matching and regular expressions are accepted). For example, compare:

coldist(vismod1, subset = "cardinal")#> patch1 patch2 dS dL

#> 1 cardinal jacana 8.436191 NA

#> 2 cardinal oriole 7.874540 NA

#> 3 cardinal parakeet 8.010167 NA

#> 4 cardinal robin 5.865464 NA

#> 5 cardinal tanager 10.116173 NAto:

#> patch1 patch2 dS dL

#> 1 cardinal jacana 8.436191 NAA bootstrap-based variant of coldist(), described in Maia and White (2018) as part of a more general analytical framework, is implemented via the function bootcoldist(). As the name suggests, bootcoldist() uses a bootstrap procedure to generate confidence intervals for the mean chromatic and/or achromatic distance between two or more samples of colours. This has the benefit of generating useful errors around distance estimates, and may also be inspected for the inclusion of an appropriate ‘threshold’, when asking common kinds of questions that relate to between-group differences (e.g. animals-vs-backgrounds, sexual dichromatism, colour polymorphism). The function’s arguments are shared with coldist() save for a few extra requirements. For one, the by argument is used to specify a grouping variable that defines the groups to be compared, while boot.n and alpha control the number of bootstrap replicates and the confidence level for the confidence interval, respectively. The latter two have sensible defaults, but the by argument is required.

Here we run present a quick example that tests for colour differences across three body regions (breast, crown, and throat) of the finch Sicalis citrina, given seven measures of each region from different males.

# Load the data

data(sicalis)

# Construct a model using an avian viewer

sicmod <- vismodel(sicalis, visual = "avg.uv", relative = FALSE)

# Create a grouping variable to group by body region,

# informed by the spectral data names

regions <- substr(rownames(sicmod), 6, 6)

# Estimate bootstrapped colour-distances

sicdist <- bootcoldist(sicmod,

by = regions,

n = c(1, 2, 2, 4),

weber = 0.05

)

# Take a look at the resulting pairwise estimates

sicdist#> dS.mean dS.lwr dS.upr

#> B-C 9.253097 5.7015179 14.013095

#> B-T 3.483528 0.3728945 9.509223

#> C-T 12.221038 8.1736179 16.899949And plot the results for good measure. We can see that the pairwise comparisons of the chest, breast, and throat are quite chromatically distinct, but the breast and throat are much more similar in colour, perhaps to the point of being indistinguishable.

plot(sicdist[, 1],

ylim = c(0, 20),

pch = 21,

bg = 1,

cex = 2,

xaxt = "n",

xlab = "Centroid comparison",

ylab = "Chromatic contrast (dS)"

)

axis(1, at = 1:3, labels = rownames(sicdist))

segments(1:3, sicdist[, 2], 1:3, sicdist[, 3], lwd = 2) # Add CI's

abline(h = 1, lty = 3, lwd = 2) # Add a 'threshold' line at dS = 1

Figure 3.5: The bootstrapped colour distances between the throat, chest, and breast regions of male Sicalis citrina.

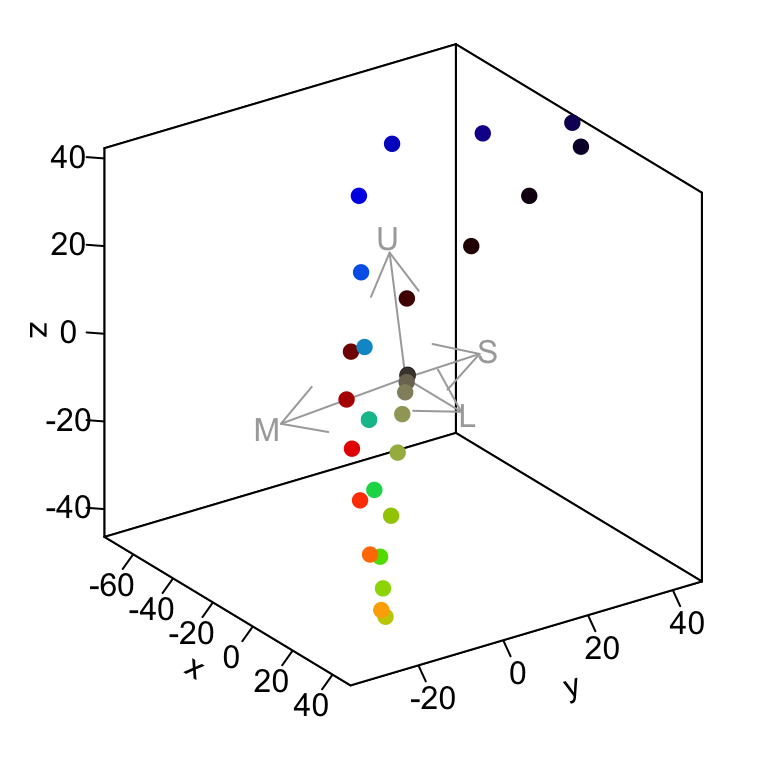

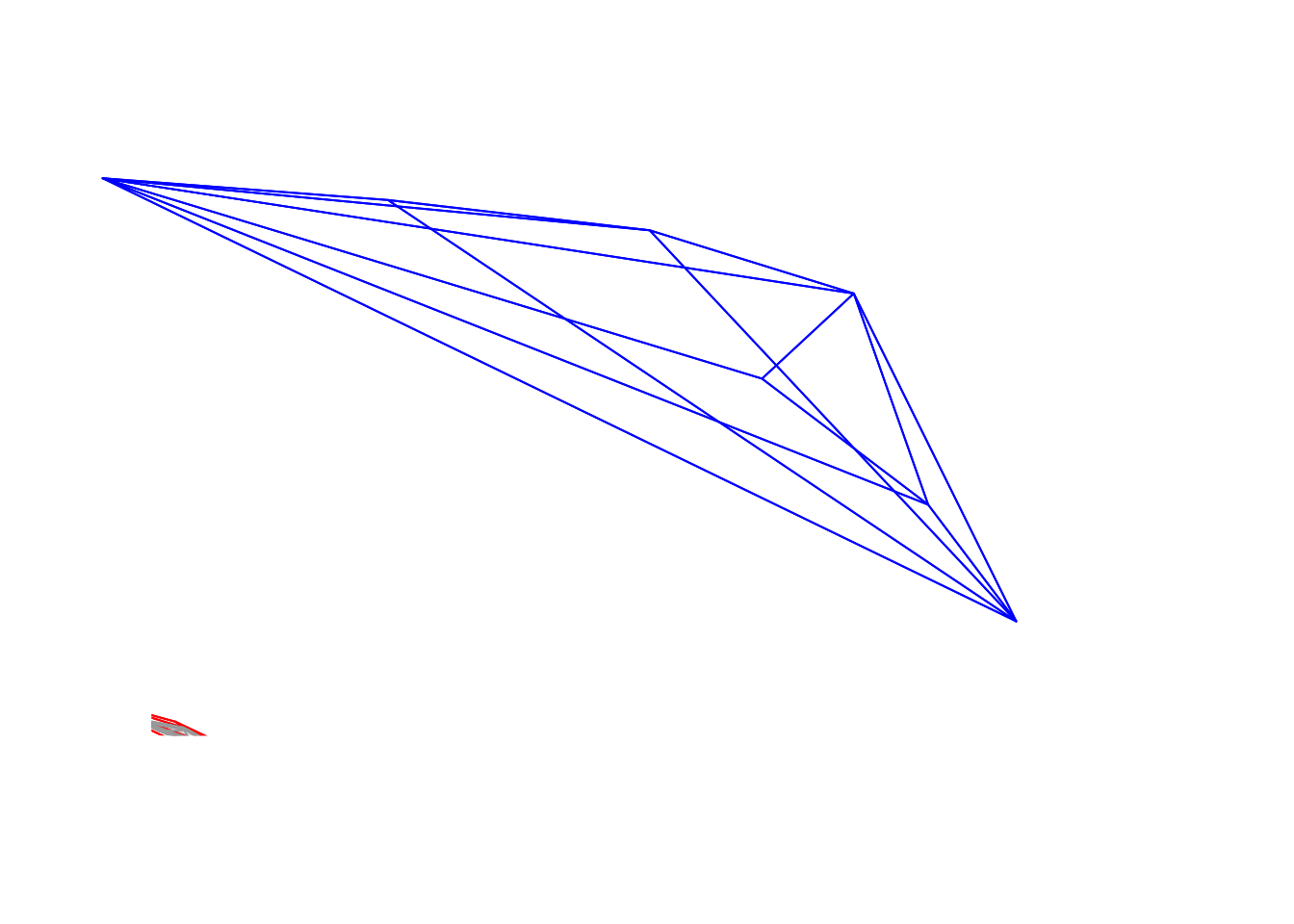

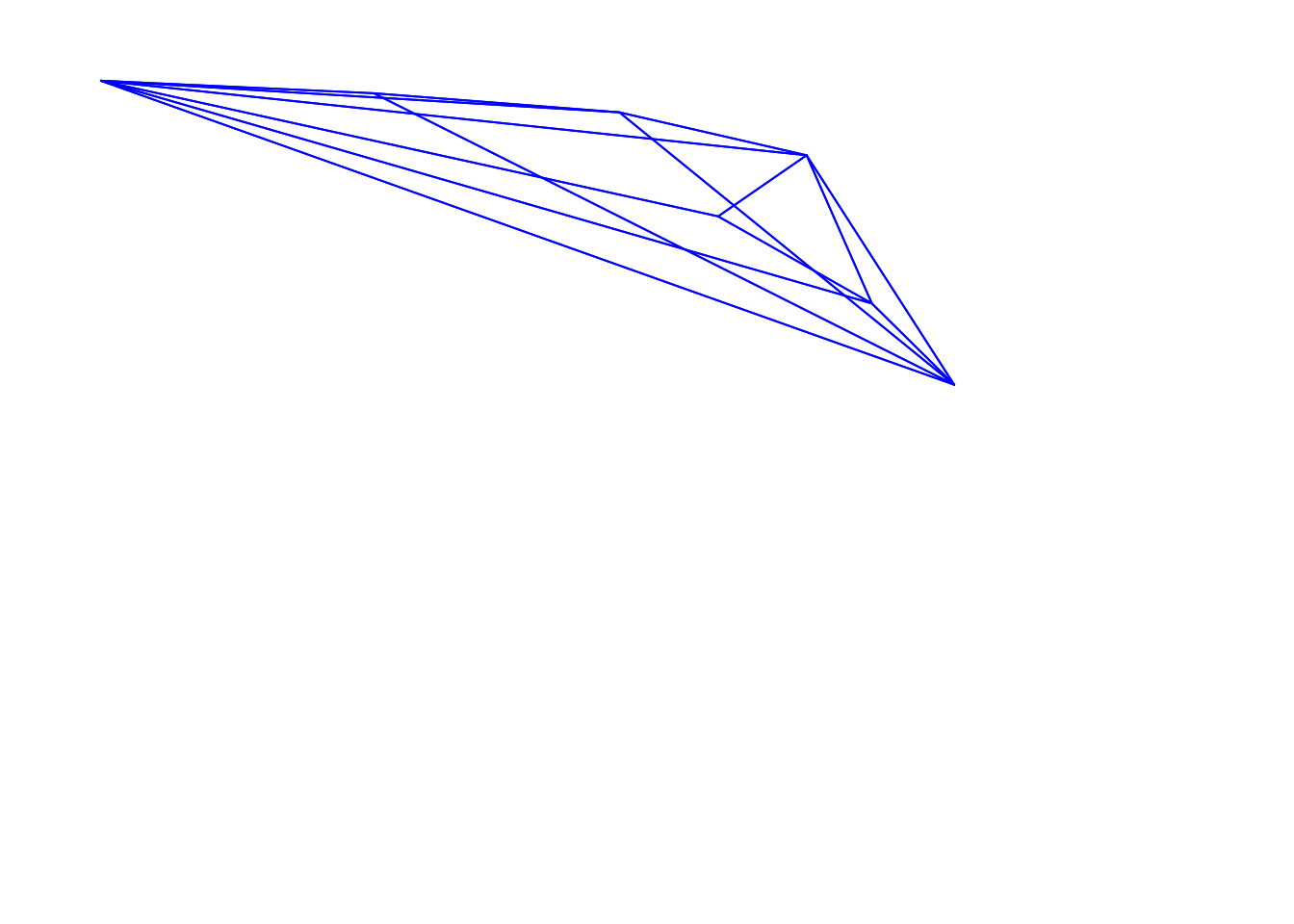

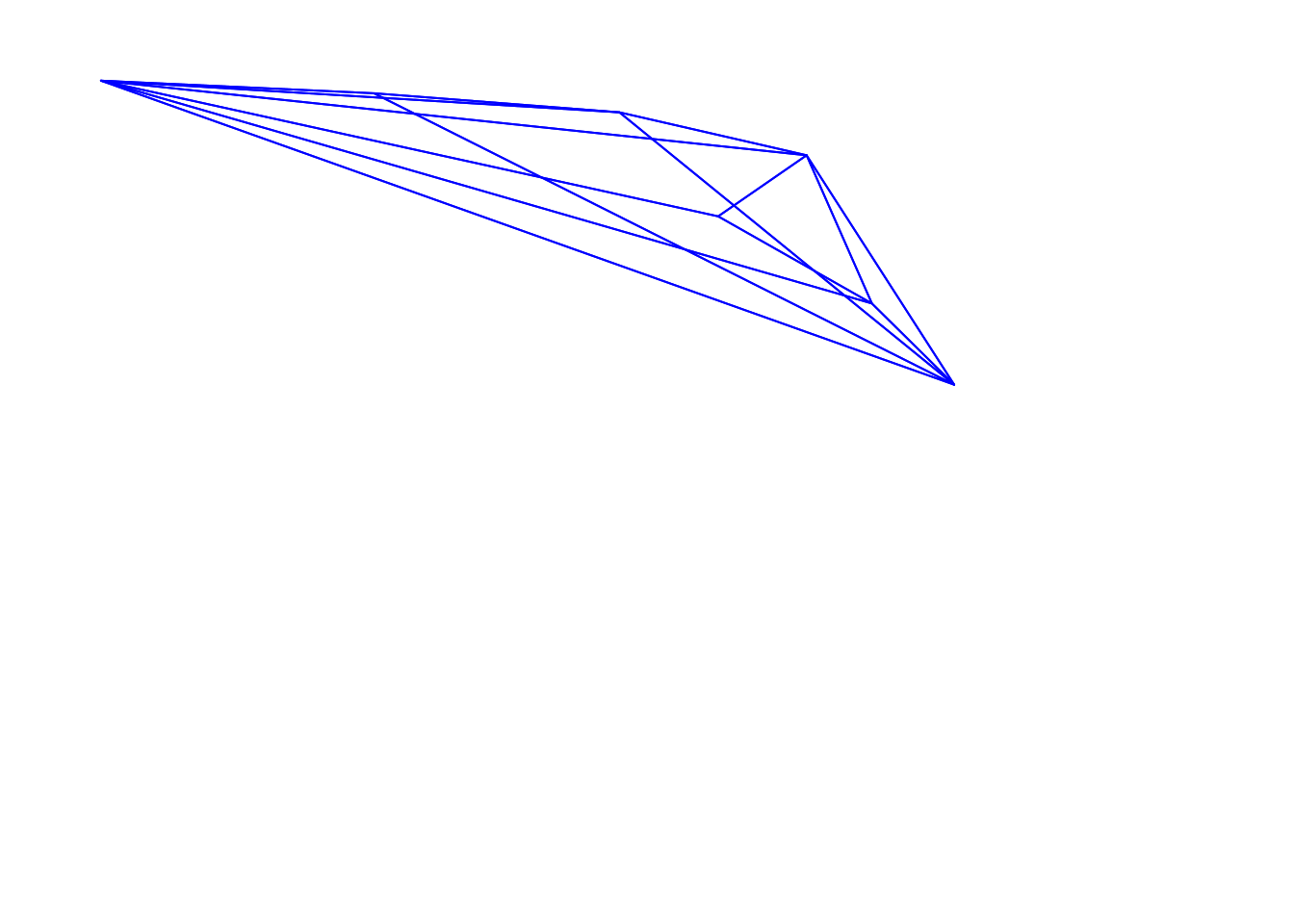

3.1.4.5.3 Converting receptor noise-corrected distances to Cartesian coordinates

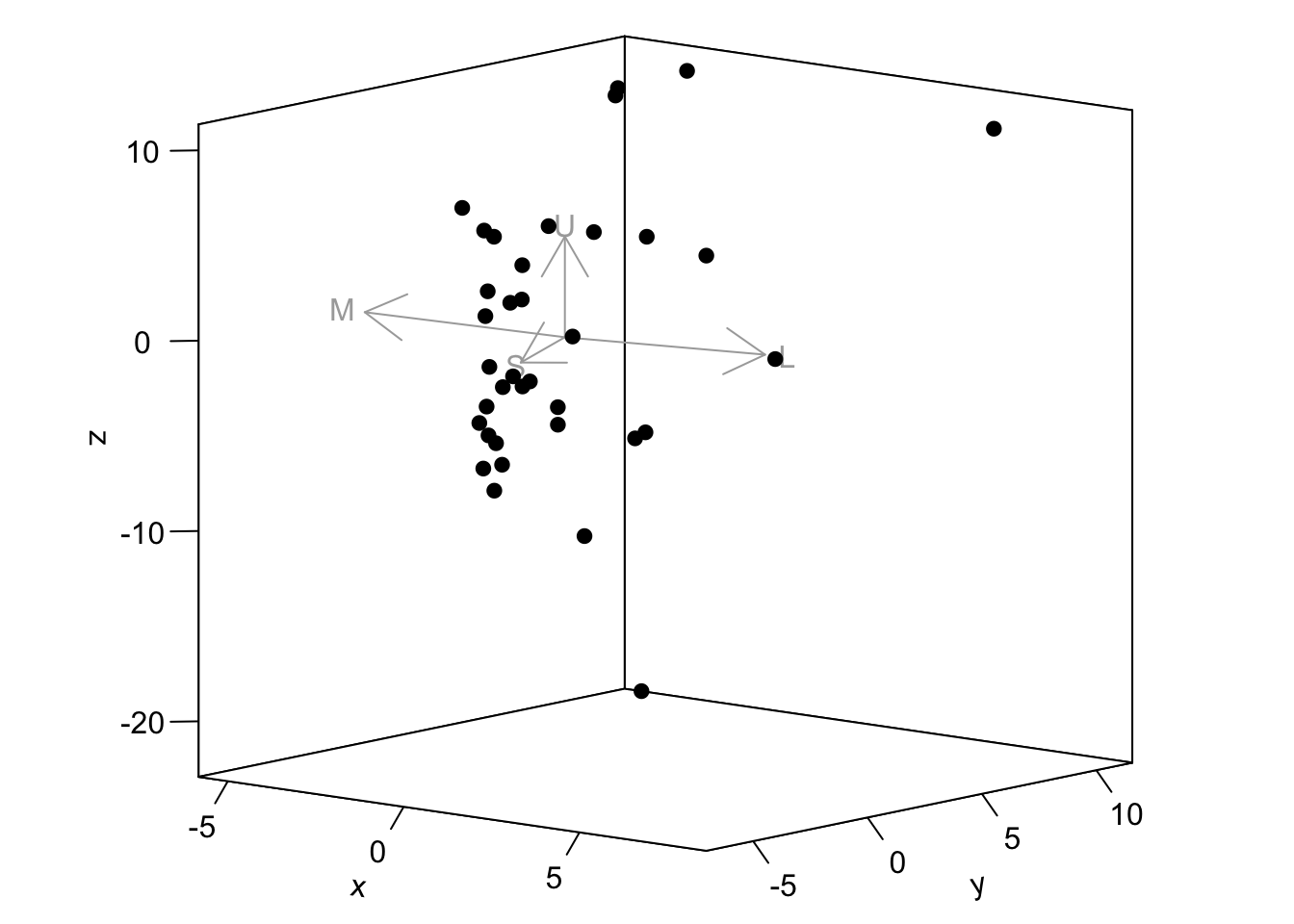

You can convert noise-corrected Euclidean distances to coordinates in Cartesian ‘noise-corrected space’ with the function jnd2xyz(). In this space, the distance between points corresponds exactly to their noise-corrected Euclidean distance as estimated under the receptor-noise limited model. Note that the absolute position of these points in the XYZ space is arbitrary, however, therefore the function allows you to rotate the data so that, for example, the vector leading to the long-wavelength cone aligns with the X axis:

fakedata1 <- vapply(

seq(100, 500, by = 20),

function(x) {

rowSums(cbind(

dnorm(300:700, x, 30),

dnorm(300:700, x + 400, 30)

))

},

numeric(401)

)

# Creating idealized specs with varying saturation

fakedata2 <- vapply(

c(500, 300, 150, 105, 75, 55, 40, 30),

function(x) dnorm(300:700, 550, x),

numeric(401)

)

fakedata1 <- as.rspec(data.frame(wl = 300:700, fakedata1))

fakedata1 <- procspec(fakedata1, "max")

fakedata2 <- as.rspec(data.frame(wl = 300:700, fakedata2))

fakedata2 <- procspec(fakedata2, "sum")

fakedata2 <- procspec(fakedata2, "min")

# Converting reflectance to percentage

fakedata1[, -1] <- fakedata1[, -1] * 100

fakedata2[, -1] <- fakedata2[, -1] / max(fakedata2[, -1]) * 100

# Combining and converting to rspec

fakedata.c <- data.frame(

wl = 300:700,

fakedata1[, -1],

fakedata2[, -1]

)

fakedata.c <- as.rspec(fakedata.c)

# Visual model and colour distances

fakedata.vm <- vismodel(fakedata.c,

relative = FALSE,

achromatic = "all"

)

fakedata.cd <- coldist(fakedata.vm,

noise = "neural", n = c(1, 2, 2, 4),

weber = 0.1, achromatic = TRUE

)

# Converting to Cartesian coordinates

fakedata.cc <- jnd2xyz(fakedata.cd,

ref1 = "l",

axis1 = c(1, 0, 0),

ref2 = NULL

)

head(fakedata.cc)#> x y z lum

#> X1 -26.165302 3.692783 -17.71718 1.430788

#> X2 -11.212399 -2.085407 -27.97937 2.315951

#> X3 3.076291 -7.441594 -37.76761 3.621349

#> X4 16.523287 -12.475488 -46.33606 4.341465

#> X5 25.812150 -17.848152 -40.71621 4.444027

#> X6 31.912643 -23.368560 -24.79660 4.046863which you can then plot as well:

Figure 3.6: Spectral data in a receptor noise-corrected colourspace

The axes in this colourspace denote noise-corrected Euclidean distances. For more information on these functions, see ?jnd2xyz, ?jndrot and ?jndplot.

3.1.4.6 Colourspaces

Another general, flexible way in which to represent stimuli is via a colourspace model. Under such models photon catches are expressed in relative values (so that the quantum catches of all receptors involved in chromatic discrimination sum to 1). The maximum stimulation of each cone \(n\) is placed at the vertex of a \((n-1)\)-dimensional polygon that encompasses all theoretical colours that can be perceived by that visual system. For the avian visual system comprised of four cones, for example, all colours can be placed somewhere in the volume of a tetrahedron, in which each of the four vertices represents the maximum stimulation of that particular cone type.

Though these models do not account for receptor noise (and thus do not allow an estimate of noise-weighted distances), they presents several advantages. First, they make for a very intuitive representation of colour points accounting for attributes of the colour vision of the signal receiver. Second, they allow for the calculation of several interesting variables that represent colour. For example, hue can be estimated from the angle of the point relative to the xy plane (blue-green-red) and the z axis (UV); saturation can be estimated as the distance of the point from the achromatic centre.

data(flowers) is a new example dataset consisting of reflectance spectra from 36 Australian angiosperm species, which we’ll use to illustrate for the following colourspace modelling.

#> wl Goodenia_heterophylla Goodenia_geniculata Goodenia_gracilis

#> 1 300 1.7426387 1.918962 0.3638354

#> 2 301 1.5724849 1.872966 0.3501921

#> 3 302 1.4099808 1.828019 0.3366520

#> 4 303 1.2547709 1.784152 0.3231911

#> 5 304 1.1064997 1.741398 0.3097854

#> 6 305 0.9648117 1.699792 0.29641073.1.4.6.1 Di-, Tri-, and Tetrachromatic Colourspaces

pavo has extensive modelling and visualisation capabilities for generic di-, tri-, and tetra-chromatic spaces, uniting these approaches in a cohesive workflow. As with most colourspace models, we first estimate relative quantum catches with various assumptions by using the vismodel() function, before converting each set of values to a location in colourspace by using the space argument in colspace() (the function can also be set to try to detect the dimensionality of the colourspace automatically). For di- tri- and tetrachromatic spaces, colspace() calculates the coordinates of stimuli as:

Dichromats: \[x = \frac{1}{\sqrt{2}}(Q_l - Q_s)\]

Trichromats:

\[ \begin{split} x &= \frac{1}{\sqrt{2}}(Q_l - Q_m) \\ y &= \frac{\sqrt{2}}{\sqrt{3}}(Q_s - \frac{Q_l + Q_m}{2}) \end{split} \] Tetrachromats:

\[ \begin{split} x &= \frac{1}{\sqrt{2}}(Q_l - Q_m) \\ y &= \frac{\sqrt{2}}{\sqrt{3}}(Q_s - \frac{Q_l + Q_m}{2}) z &= \frac{\sqrt{3}}{2}(Q_u - \frac{Q_l + Q_m + Q_s}{3}) \end{split} \]

Where \(Q_u\), \(Q_s\), \(Q_m\), and \(Q_l\) refer to quantum catch estimates for UV-, short, medium-, and long-wavelength photoreceptors, respectively.

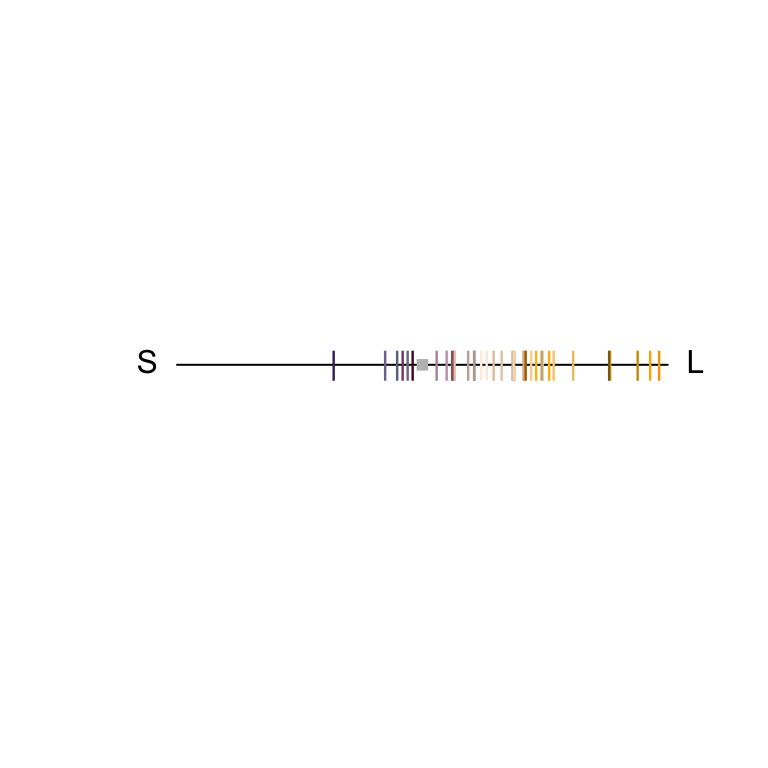

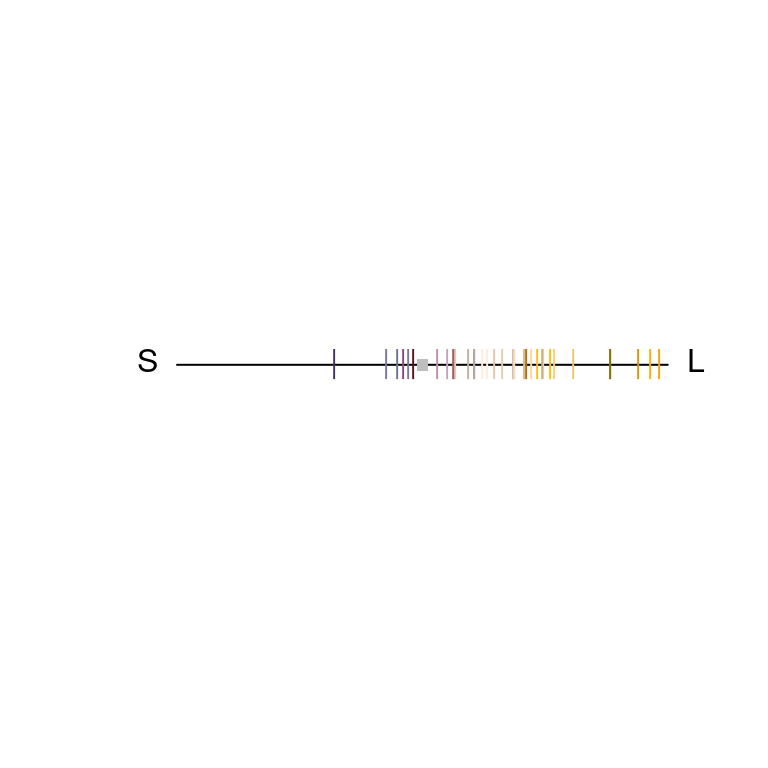

For a dichromatic example, we can model our floral reflectance data using the visual system of the domestic dog Canis familiaris, which has two cones with maximal sensitivity near 440 and 560 nm.

vis.flowers <- vismodel(flowers, visual = "canis")

di.flowers <- colspace(vis.flowers, space = "di")

head(di.flowers)#> s l x r.vec

#> Goodenia_heterophylla 0.52954393 0.4704561 -0.04178143 0.04178143

#> Goodenia_geniculata 0.25430150 0.7456985 0.34747015 0.34747015

#> Goodenia_gracilis 0.01747832 0.9825217 0.68238870 0.68238870

#> Xyris_operculata 0.39433933 0.6056607 0.14942675 0.14942675

#> Eucalyptus_sp 0.40628552 0.5937145 0.13253229 0.13253229

#> Faradaya_splendida 0.23166580 0.7683342 0.37948187 0.37948187

#> lum

#> Goodenia_heterophylla NA

#> Goodenia_geniculata NA

#> Goodenia_gracilis NA

#> Xyris_operculata NA

#> Eucalyptus_sp NA

#> Faradaya_splendida NAThe output contains values for the relative stimulation of short- and long-wavelength sensitive photoreceptors associated with each flower, along with its single coordinate in dichromatic space and its r.vector (distance from the origin). To visualise where these points lie, we can simply plot them on a segment.

Figure 3.7: Flowers in a dichromatic colourspace, as modelled according to a canid visual system.

For our trichromatic viewer we can use the honeybee Apis mellifera, one of the most significant and widespread pollinators. We’ll also transform our quantum catches according to Fechner’s law by specifying qcatch = 'fi', and will model photoreceptor stimulation under bright conditions by scaling our illuminant with the scale argument.

vis.flowers <- vismodel(flowers,

visual = "apis",

qcatch = "fi",

scale = 10000

)

tri.flowers <- colspace(vis.flowers, space = "tri")

head(tri.flowers)#> s m l x

#> Goodenia_heterophylla 0.2882383 0.3587254 0.3530363 -0.004022828

#> Goodenia_geniculata 0.2475326 0.3549832 0.3974842 0.030052720

#> Goodenia_gracilis 0.1373117 0.3125304 0.5501579 0.168028001

#> Xyris_operculata 0.2395035 0.3729820 0.3875145 0.010275990

#> Eucalyptus_sp 0.2760091 0.3548096 0.3691813 0.010162377

#> Faradaya_splendida 0.2654951 0.3431266 0.3913783 0.034119147

#> y h.theta r.vec lum

#> Goodenia_heterophylla -0.05522995 -1.6435057 0.05537626 NA

#> Goodenia_geniculata -0.10508396 -1.2922439 0.10929687 NA

#> Goodenia_gracilis -0.24007647 -0.9601417 0.29303604 NA

#> Xyris_operculata -0.11491764 -1.4816131 0.11537616 NA

#> Eucalyptus_sp -0.07020755 -1.4270471 0.07093922 NA

#> Faradaya_splendida -0.08308452 -1.1811377 0.08981733 NAAs in the case of our dichromat, the output contains relative photoreceptor stimulations, coordinates in the Maxwell triangle, as well as the ‘hue angle’ h.theta and distance from the origin (r.vec).

Figure 3.8: Floral reflectance in a Maxwell triangle, considering a honeybee visual system.

Finally, we’ll draw on the blue tit’s visual system to model our floral reflectance spectra in a tetrahedral space, again using log-transformed quantum catches and assuming bright viewing conditions.

vis.flowers <- vismodel(flowers,

visual = "bluetit",

qcatch = "fi",

scale = 10000

)

tetra.flowers <- colspace(vis.flowers, space = "tcs")

head(tetra.flowers)#> u s m l

#> Goodenia_heterophylla 0.2274310 0.2659669 0.2524170 0.2541851

#> Goodenia_geniculata 0.1625182 0.2721621 0.2828016 0.2825182

#> Goodenia_gracilis 0.0428552 0.2443091 0.3504414 0.3623943

#> Xyris_operculata 0.1766234 0.2709785 0.2642637 0.2881344

#> Eucalyptus_sp 0.2118481 0.2609468 0.2637023 0.2635028

#> Faradaya_splendida 0.1844183 0.2557660 0.2786393 0.2811764

#> u.r s.r m.r

#> Goodenia_heterophylla -0.02256897 0.015966893 0.002417012

#> Goodenia_geniculata -0.08748184 0.022162064 0.032801599

#> Goodenia_gracilis -0.20714480 -0.005690920 0.100441373

#> Xyris_operculata -0.07337659 0.020978454 0.014263689

#> Eucalyptus_sp -0.03815190 0.010946788 0.013702317

#> Faradaya_splendida -0.06558169 0.005765955 0.028639328

#> l.r x y

#> Goodenia_heterophylla 0.004185066 -0.007214866 -0.005415708

#> Goodenia_geniculata 0.032518179 0.006341799 0.003861848

#> Goodenia_gracilis 0.112394348 0.072312163 0.033297418

#> Xyris_operculata 0.038134446 0.010505857 -0.010813615

#> Eucalyptus_sp 0.013502794 0.001565228 0.001044768

#> Faradaya_splendida 0.031176408 0.015560661 0.007189965

#> z h.theta h.phi r.vec

#> Goodenia_heterophylla -0.02256897 -2.4976873 -1.190529 0.02430520

#> Goodenia_geniculata -0.08748184 0.5469755 -1.486123 0.08779638

#> Goodenia_gracilis -0.20714480 0.4315247 -1.203879 0.22191605

#> Xyris_operculata -0.07337659 -0.7998327 -1.368146 0.07490949

#> Eucalyptus_sp -0.03815190 0.5885699 -1.521510 0.03819828

#> Faradaya_splendida -0.06558169 0.4328380 -1.315140 0.06778487

#> r.max r.achieved lum

#> Goodenia_heterophylla 0.2692325 0.09027589 NA

#> Goodenia_geniculata 0.2508989 0.34992737 NA

#> Goodenia_gracilis 0.2678272 0.82857920 NA

#> Xyris_operculata 0.2552227 0.29350636 NA

#> Eucalyptus_sp 0.2503039 0.15260760 NA

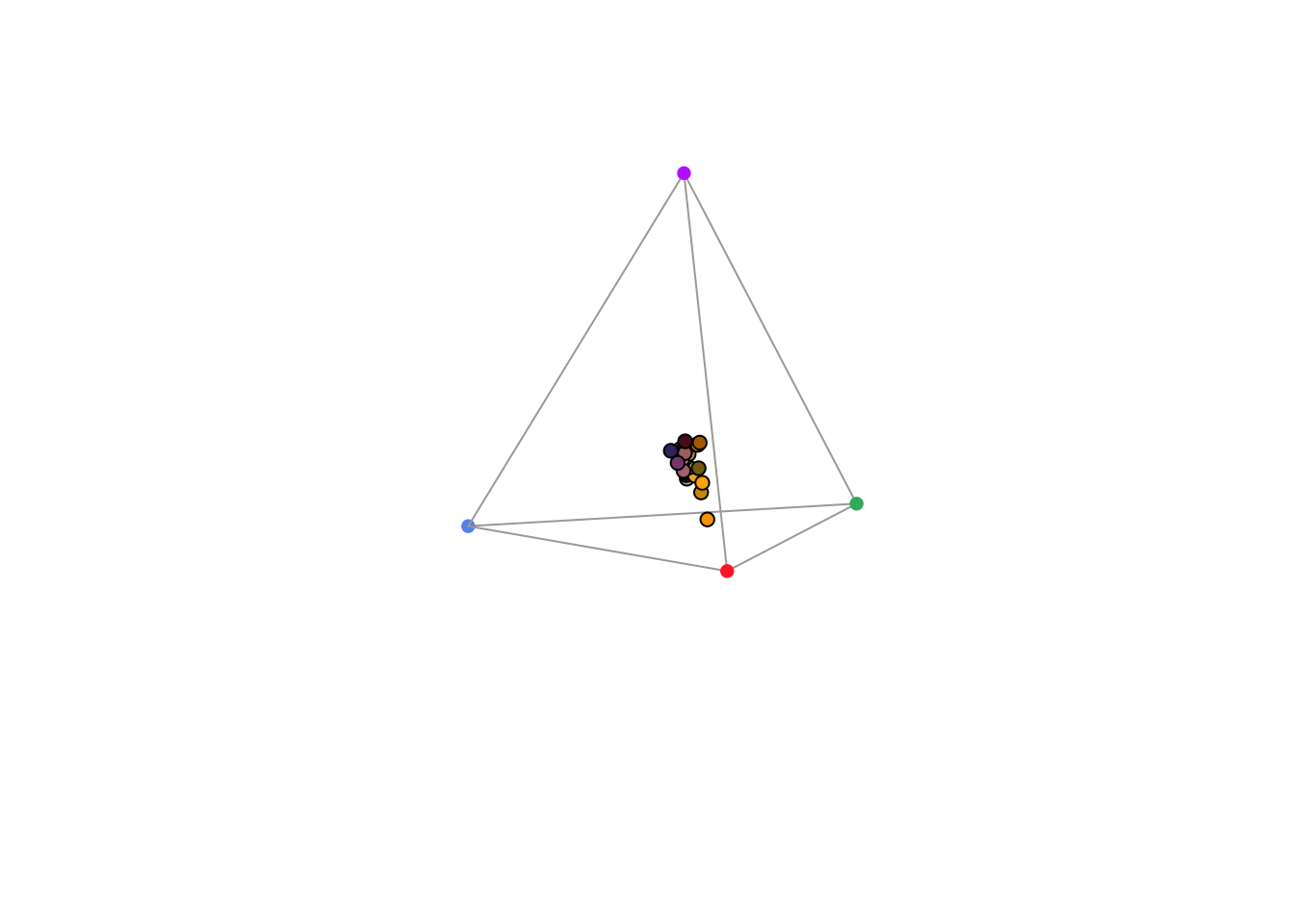

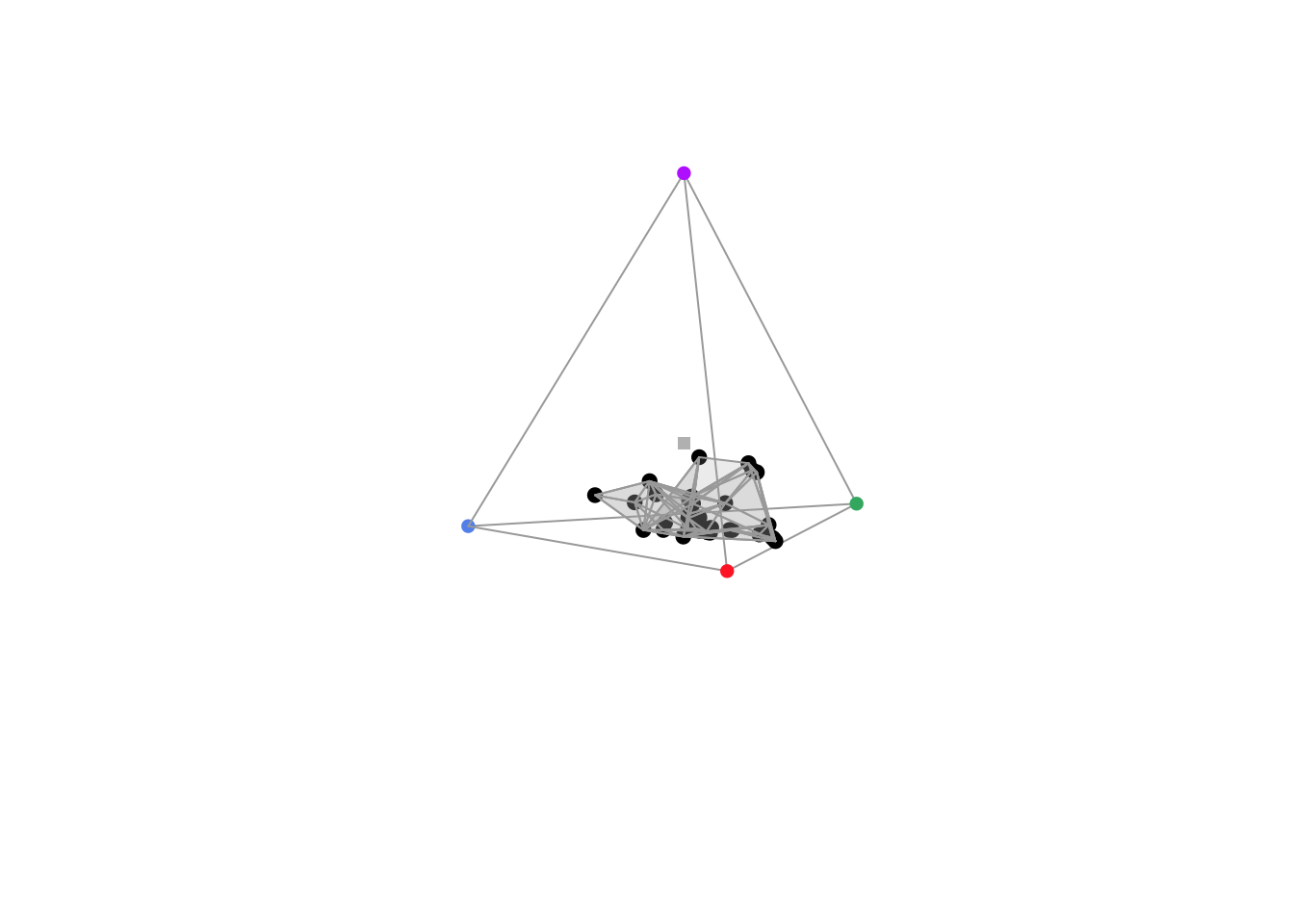

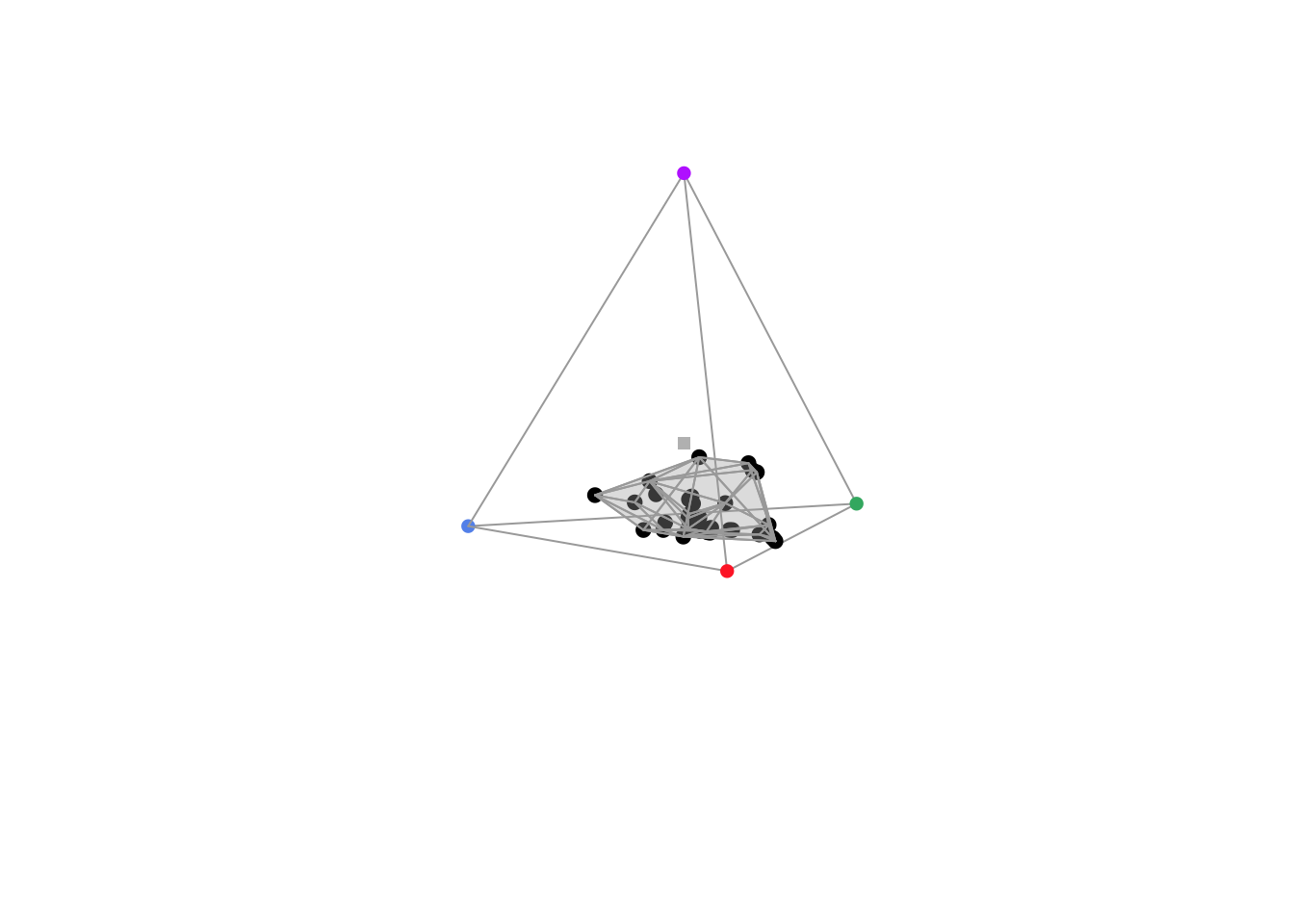

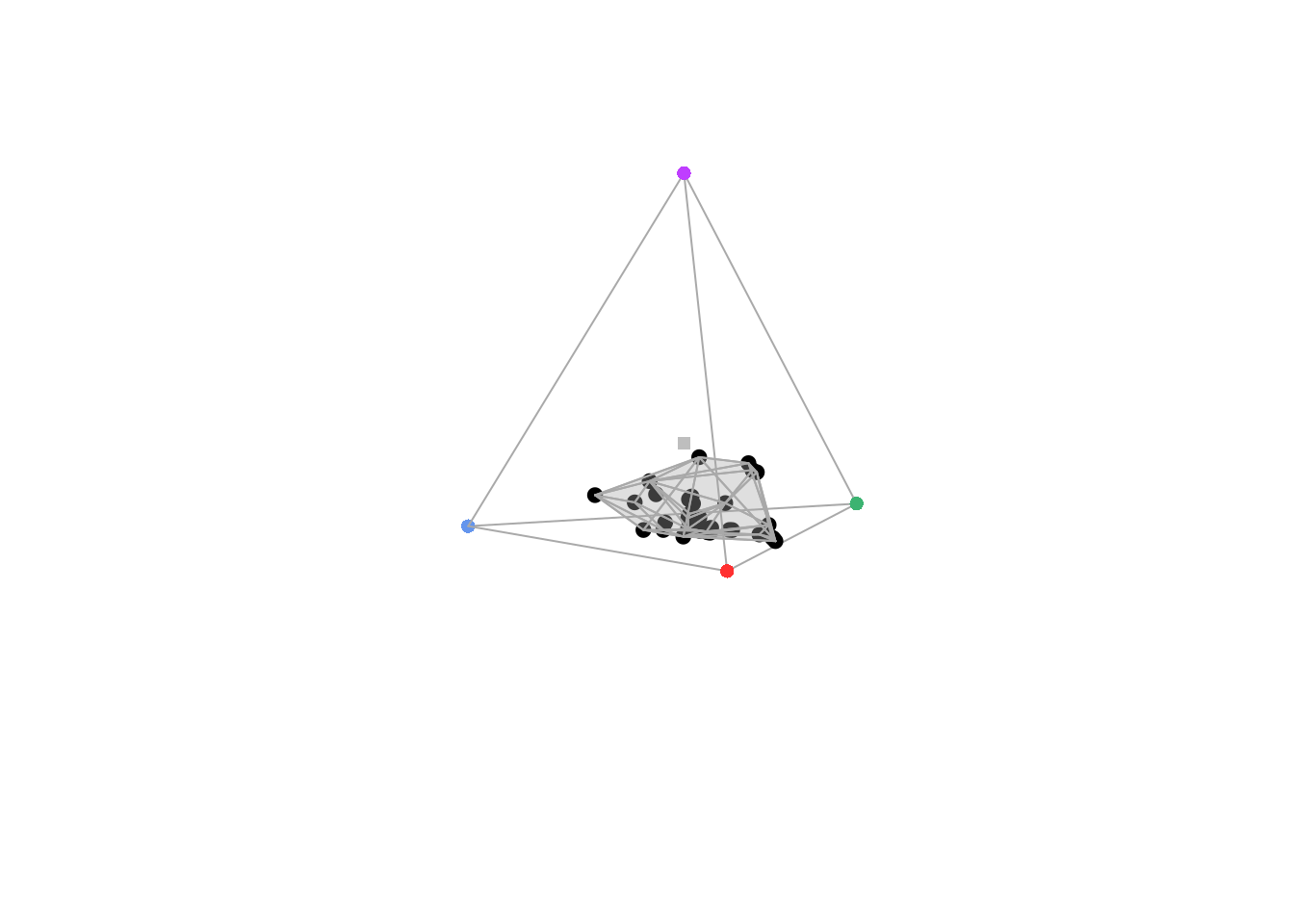

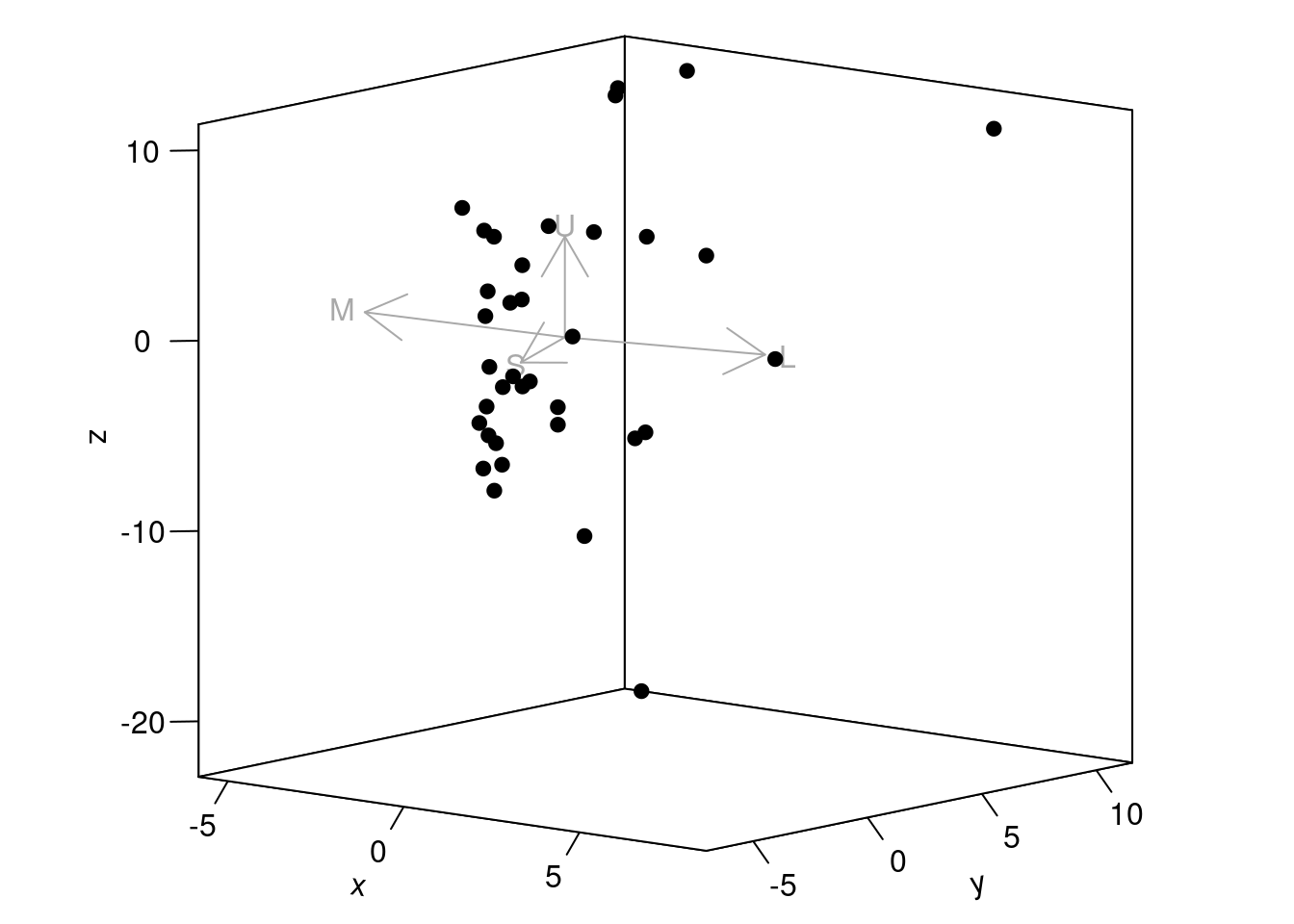

#> Faradaya_splendida 0.2583986 0.26232676 NATetrahedral data (via colspace(space = 'tcs')) may be plotted in a standard static tetrahedron by using plot(), or can be visualised as part of an interactive tetrahedron by using tcsplot() with the accessory functions tcspoints() and tcsvol() for adding points and convex hulls, respectively. As with other colourspace plots there are a number of associated graphical options, though the theta and phi arguments are particularly useful in this case, as they control the orientation (in degrees) of the tetrahedron in the xy and yz planes, respectively.

When plotting the tetrahedral colourspace, one can also force perspective by changing the size of points relative to their distance from the plane of observation, using the arguments perspective = TRUE and controlling the size range with the range argument. Several other options control the appearance of this plot, you can check these using ?plot.colspace or ?tetraplot

plot(tetra.flowers,

pch = 21,

bg = spec2rgb(flowers),

perspective = TRUE,

range = c(1, 2),

cex = 0.5

)

Figure 3.9: Flowers in a tetrahedral colourspace modelled using the visual phenotype of the blue tit. Point size is used to force perspective

Two additional functions may help with tetrahedral colourspace plotting:

-

axistetra()function can be used to draw arrows showing the direction and magnitude of distortion of x, y and z in the tetrahedral plot. -

legendtetra()allows you to add a legend to a plot.

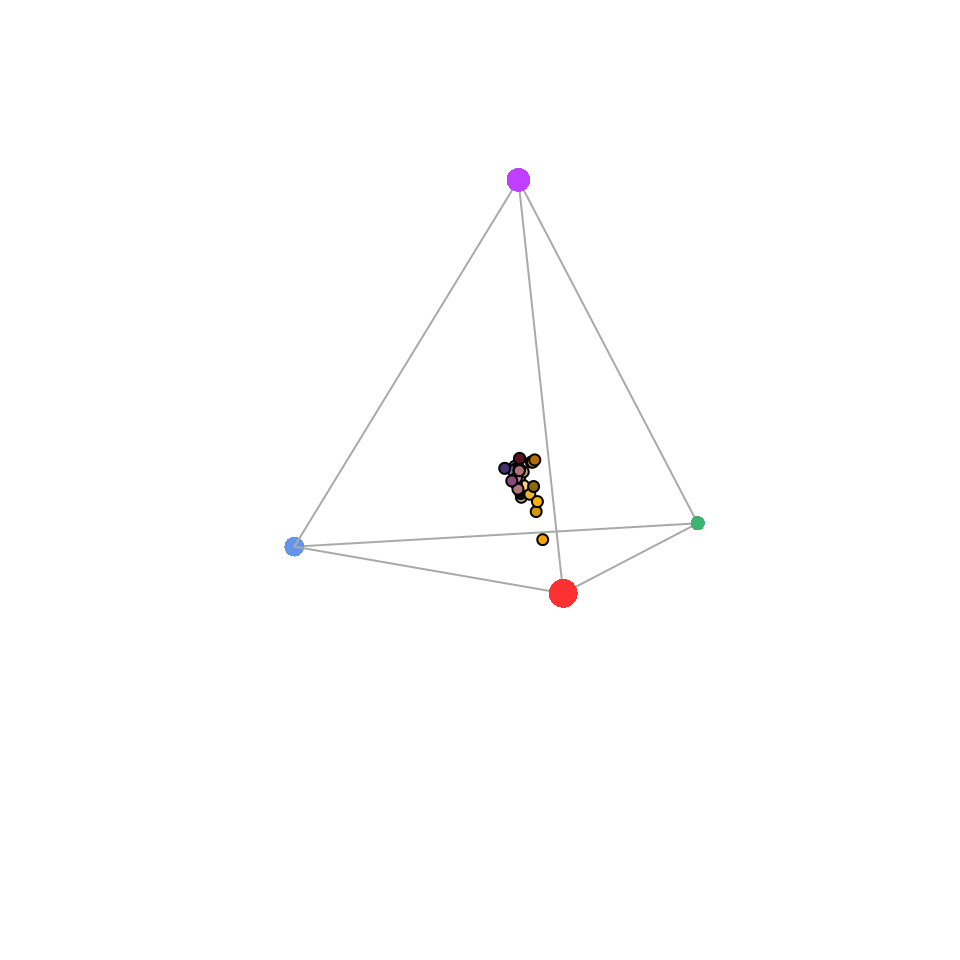

plot(tetra.flowers,

pch = 21,

bg = spec2rgb(flowers)

)

axistetra(x = 0, y = 1.8)

plot(tetra.flowers,

theta = 110,

phi = 10,

pch = 21,

bg = spec2rgb(flowers)

)

axistetra(x = 0, y = 1.8)

Figure 3.10: Flowers in a tetrahedral colourspace, with varied orientations and perspectives, modelled using the visual phenotype of the blue tit.

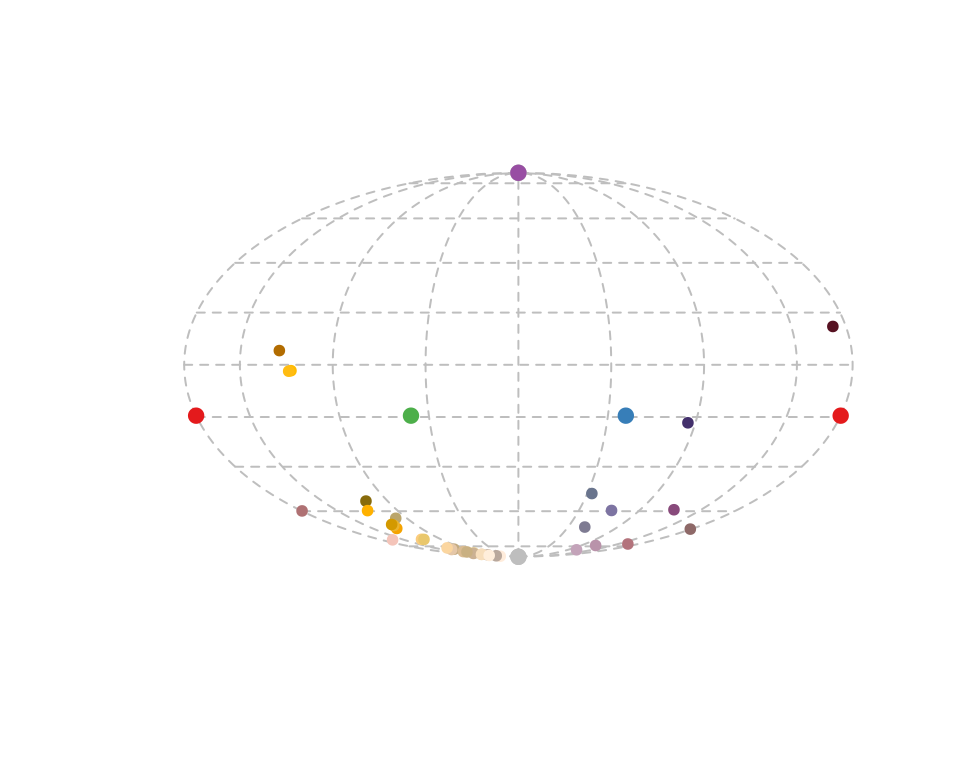

For tetrahedral models, another plotting option available is projplot, which projects colour points in the surface of a sphere encompassing the tetrahedron. This plot is particularly useful to see differences in hue.

Figure 3.11: Projection plot from a tetrahedral colour space.

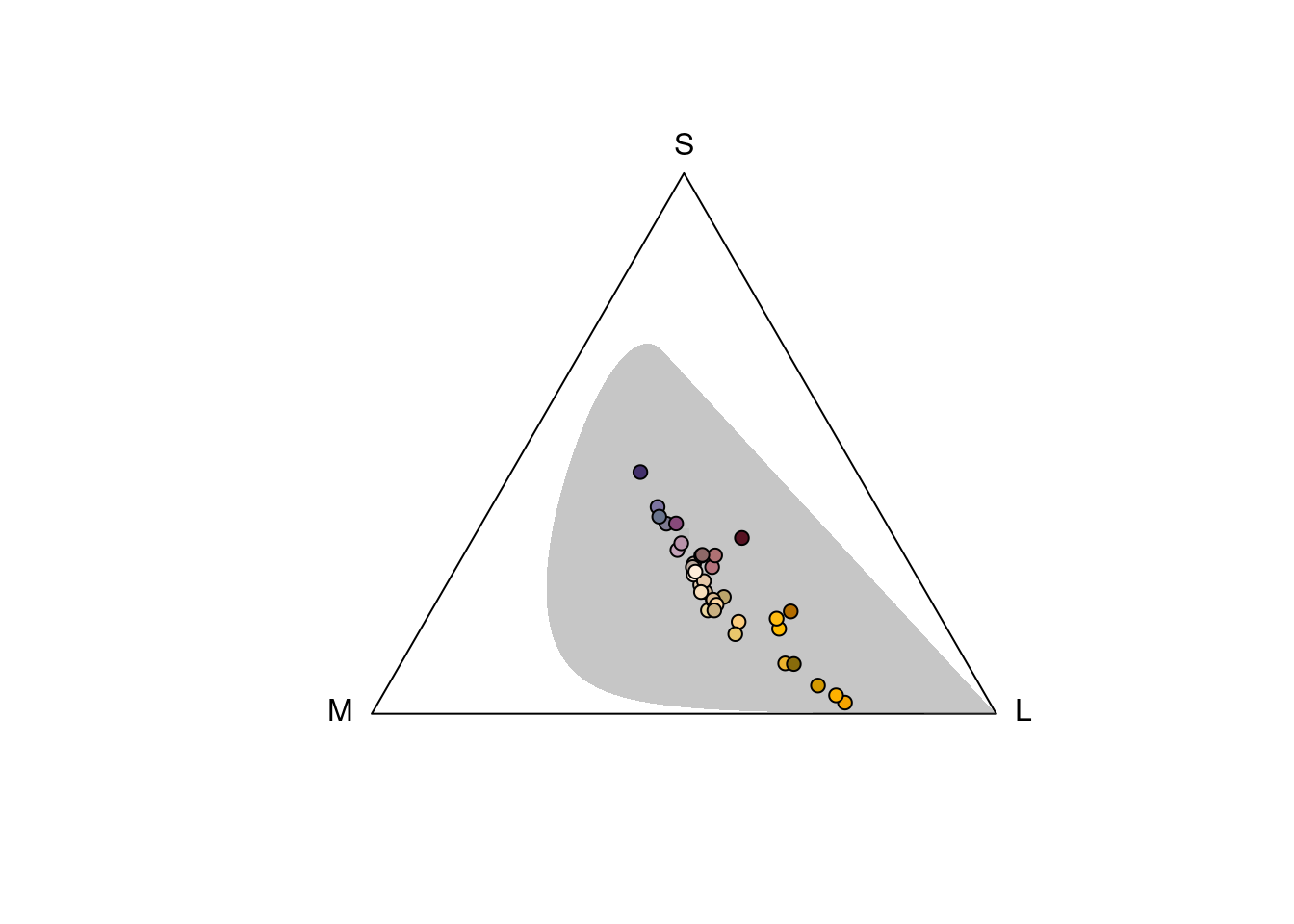

Estimating colour volumes

A further useful function for tri- and tetrahedral models is voloverlap(), which calculates the overlap in tri- or tetrahedral colour volume between two sets of points. This can be useful to explore whether different species occupy similar (overlapping) or different (non- overlapping) “sensory niches”, or to test for mimetism, dichromatism, etc. (Stoddard and Stevens 2011). To demonstrate, we will use the sicalis dataset, which includes measurements from the crown (C), throat (T) and breast (B) of seven stripe-tailed yellow finches (Sicalis citrina).

data(sicalis)We will use this dataset to test for the overlap between the volume determined by the measurements of those body parts from multiple individuals in the tetrahedral colourspace (note the option plot for plotting of the volumes):

tcs.sicalis.C <- subset(colspace(vismodel(sicalis)), "C")

tcs.sicalis.T <- subset(colspace(vismodel(sicalis)), "T")

tcs.sicalis.B <- subset(colspace(vismodel(sicalis)), "B")

voloverlap(tcs.sicalis.T, tcs.sicalis.B, plot = TRUE)#> vol1 vol2 overlapvol vsmallest vboth

#> 1 5.183721e-06 6.281511e-06 6.904074e-07 0.1331876 0.06407598

voloverlap(tcs.sicalis.T, tcs.sicalis.C, plot = TRUE)#> vol1 vol2 overlapvol vsmallest vboth

#> 1 5.183721e-06 4.739152e-06 0 0 0

The function voloverlap() gives the volume (\(V\)) of the convex hull delimited by the overlap between the two original volumes, and two proportions are calculated from that: \[\text{vsmallest} = V_{overlap} / \min(V_A, V_B)\] and \[\text{vboth} = V_{overlap} / (V_A + V_B)\]. Thus, if one of the volumes is entirely contained in the other, vsmallest will equal 1. So we can clearly see that there is overlap between the throat and breast colours (of about 6%), but not between the throat and the crown colours (Figures above).

Alternatively, \(\alpha\)-shapes are a new tool available in pavo to estimate colour volumes,

while allowing the presence of voids and pockets. \(\alpha\)-shapes may thus lead to a more

accurate measurement of “colourfulness” than convex hulls. For more information

on the theoretical background, please report to the related article (Gruson 2020).

You can plot the colour volume using \(\alpha\)-shape with the vol() function

(for non-interactive plots, tcsvol() otherwise) by specifying

type = "alpha". By default, this will use the \(\alpha^*\) value defined in

Gruson (2020).

data(flowers)

vis_flowers <- vismodel(flowers, visual = "avg.uv")

tcs_flowers <- colspace(vis_flowers)

plot(tcs_flowers)

vol(tcs_flowers, type = "alpha")#> Warning in rgl.init(initValue, onlyNULL): RGL: unable to open X11

#> display#> Warning: 'rgl.init' failed, running with 'rgl.useNULL = TRUE'.#> 'avalue' automatically set to 1.8275e-01

Alternatively, you can set the \(\alpha\) parameter to the value of your choice via the avalue

argument:

In the previous examples, we focused on \(\alpha\)-shapes in chromaticity diagrams since it is the most common space where convex hulls (that \(\alpha\)-shapes aim at replacing) are used. But it is also possible to use \(\alpha\)-shapes in other spaces, such as perceptually uniform (noise-corrected) spaces.

Let’s first build this uniform space and look at the data points in this space:

cd_flowers <- coldist(vis_flowers)#> Quantum catch are relative, distances may not be meaningful#> Calculating noise-weighted Euclidean distances

High-level functions to build the \(\alpha\)-shape directly in pavo have not yet

been implemented but you can use the alphashape3d package directly to compute

the \(\alpha\)-shapes, its volume and display it in a 3D interactive plot.

library(alphashape3d)#> Loading required package: geometry#> Loading required package: rgl

ashape_jnd <- ashape3d(as.matrix(xy_flowers), alpha = 10)

volume_ashape3d(ashape_jnd)#> [1] 748.863

plot(ashape_jnd)#> Device 1 : alpha = 10\(\alpha\)-shapes can also be used to measure the colour similarity of two objects,

by computing the colour volume overlap. This is done with the type = "alpha" argument

in voloverlap(). For example, let’s compare ibce agaub the colour volume of the

crown and the breast of stripe-tailed yellow finches (Sicalis citrina),

but this time with \(\alpha\)-shapes:

data(sicalis)

tcs.sicalis.C <- subset(colspace(vismodel(sicalis)), "C")

tcs.sicalis.B <- subset(colspace(vismodel(sicalis)), "B")

voloverlap(tcs.sicalis.C,

tcs.sicalis.B,

type = "alpha",

plot = TRUE

)#> 'avalue' automatically set to 2.4445e-02#> 'avalue' automatically set to 2.6255e-01#> Warning: interactive = FALSE has not been implemented yet,

#> falling back tointeractive plot.#> vol1 vol2 s_in1 s_in2 s_inboth s_ineither

#> 1 1.849436e-06 4.586381e-06 2 4 0 6

#> psmallest pboth

#> 1 0 0Summary variables for groups of points

Another advantage of colourspace models is that they allow for the calculation of useful summary statistics of groups of points, such as the centroid of the points, the total volume occupied (as above), the mean and variance of hue span and the mean saturation. In pavo, the result of a colspace() call is an object of class colspace, and thus these summary statistics can be calculated simply by calling summary. Note that the summary call can also take a by vector of group identities, so that the variables are calculated for each group separately:

summary(tetra.flowers)#> Colorspace & visual model options:

#> * Colorspace: tcs

#> * Quantal catch: fi

#> * Visual system, chromatic: bluetit

#> * Visual system, achromatic: none

#> * Illuminant: ideal, scale = 10000 (von Kries colour correction not applied)

#> * Background: ideal

#> * Relative: TRUE

#> * Max possible chromatic volume: NA#> 'avalue' automatically set to 1.9861e-01#> centroid.u centroid.s centroid.m centroid.l c.vol

#> all.points 0.1948478 0.2554421 0.2704411 0.279269 0.0002510915

#> rel.c.vol colspan.m colspan.v huedisp.m huedisp.v

#> all.points 0.001159742 0.05706825 0.001682224 0.6413439 0.3157949

#> mean.ra max.ra a.vol

#> all.points 0.2428508 0.8285792 0.0001723672It is important to highlight that oftentimes, the properties of the visual system and/or illuminant will make it impossible to reach the entirety of a given colourspace. You can view the maximum gamut of a given viewer by switching the gamut argument to TRUE in plot.colspace():

vis.flowers <- vismodel(flowers, visual = "ctenophorus")

tri.flowers <- colspace(vis.flowers)

plot(tri.flowers, pch = 21, bg = spec2rgb(flowers), gamut = TRUE)

This information is also available in the summary, in the “Max possible chromatic volume” line:

summary(tri.flowers)#> Colorspace & visual model options:

#> * Colorspace: trispace

#> * Quantal catch: Qi

#> * Visual system, chromatic: ctenophorus

#> * Visual system, achromatic: none

#> * Illuminant: ideal, scale = 1 (von Kries colour correction not applied)

#> * Background: ideal

#> * Relative: TRUE

#> * Max possible chromatic volume: 0.4729301#> s m l

#> Min. :0.02084 Min. :0.2318 Min. :0.2066

#> 1st Qu.:0.18617 1st Qu.:0.3005 1st Qu.:0.3806

#> Median :0.23206 Median :0.3452 Median :0.4180

#> Mean :0.23206 Mean :0.3225 Mean :0.4454

#> 3rd Qu.:0.29330 3rd Qu.:0.3513 3rd Qu.:0.5039

#> Max. :0.44720 Max. :0.3660 Max. :0.7474

#> x y h.theta

#> Min. :-0.09877 Min. :-0.38273 Min. :-1.9491

#> 1st Qu.: 0.02242 1st Qu.:-0.18023 1st Qu.:-1.2269

#> Median : 0.05899 Median :-0.12404 Median :-0.9590

#> Mean : 0.08689 Mean :-0.12404 Mean :-0.5767

#> 3rd Qu.: 0.12564 3rd Qu.:-0.04903 3rd Qu.:-0.7747

#> Max. : 0.36460 Max. : 0.13946 Max. : 2.6273

#> r.vec lum

#> Min. :0.02264 Mode:logical

#> 1st Qu.:0.08330 NA's:36

#> Median :0.13917

#> Mean :0.17858

#> 3rd Qu.:0.24002

#> Max. :0.528603.1.4.6.2 The Colour Hexagon

The hexagon colour space of Chittka (1992) is a generalised colour-opponent model of hymenopteran vision that has found extremely broad use, particularly in studies of bee-flower interactions. It’s also often broadly applied across hymenopteran species, because the photopigments underlying trichromatic vision in Hymenoptera appear to be quite conserved (Briscoe and Chittka 2001). What’s particularly useful is that colour distances within the hexagon have been extensively validated against behaviour, at least among honeybees, and thus offer a relatively reliable measure of perceptual distance.

In the hexagon, photoreceptor quantum catches are typically hyperbolically transformed (and pavo will return a warning if the transform is not selected), and vonkries correction is often used used to model photoreceptor adaptation to a vegetation background. This can all now be specified in vismodel(). including the optional use of a built-in ‘green’ vegetation background. Note that although this is a colourspace model, we specific relative = FALSE to return unnormalised quantum catches, as required for the model.

vis.flowers <- vismodel(flowers,

visual = "apis",

qcatch = "Ei",

relative = FALSE,

vonkries = TRUE,

achromatic = "l",

bkg = "green"

)We can then apply the hexagon model in colspace, which will convert our photoreceptor excitation values to coordinates in the hexagon according to:

\[ \begin{split} x &= \frac{\sqrt{3}}{2(E_g + E_{uv})}\\ y &= E_b - 0.5(E_{uv} + E_g) \end{split} \]

#> s m l x

#> Goodenia_heterophylla 0.56572097 0.8237141 0.7053057 0.1208839

#> Goodenia_geniculata 0.35761236 0.8176153 0.8670134 0.4411542

#> Goodenia_gracilis 0.01888788 0.1622766 0.7810589 0.6600594

#> Xyris_operculata 0.21080752 0.7345122 0.6796464 0.4060263

#> Eucalyptus_sp 0.55622758 0.8515289 0.8208038 0.2291297

#> Faradaya_splendida 0.45855056 0.7828905 0.8565895 0.3447118

#> y h.theta r.vec sec.fine

#> Goodenia_heterophylla 0.1882007 32.71320 0.2236793 30

#> Goodenia_geniculata 0.2053024 65.04388 0.4865862 60

#> Goodenia_gracilis -0.2376968 109.80467 0.7015541 100

#> Xyris_operculata 0.2892853 54.53092 0.4985412 50

#> Eucalyptus_sp 0.1630132 54.57024 0.2812005 50

#> Faradaya_splendida 0.1253205 70.02119 0.3667853 70

#> sec.coarse lum

#> Goodenia_heterophylla bluegreen 0.1680153

#> Goodenia_geniculata bluegreen 0.3548825

#> Goodenia_gracilis green 0.2313678

#> Xyris_operculata bluegreen 0.1518319

#> Eucalyptus_sp bluegreen 0.2787543

#> Faradaya_splendida bluegreen 0.3351008Again, the output includes the photoreceptor excitation values for short- medium- and long-wave sensitive photoreceptors, x and y coordinates, and measures of hue and saturation for each stimulus. The hexagon model also outputs two additional measures of of subjective ‘bee-hue’; sec.fine and sec.coarse. sec.fine describes the location of stimuli within one of 36 ‘hue sectors’ that are specified by radially dissecting the hexagon in 10-degree increments. sec.coarse follows a similar principle, though here the hexagon is divided into only five ‘bee-hue’ sectors: UV, UV-blue, blue, blue-green, green, and UV-green. These can easily be visualised by specifying sectors = 'coarse or sectors = 'fine' in a call to plot after modelling.

Figure 3.12: Flowers as modelled in the hymenopteran colour hexagon of Chittka (1992), overlain with coarse bee-hue sectors.

Note that ‘green receptor contrast’ (as per Spaethe (2001) and much subsequent work) in the hexagon model is often of interest, given its role in mediating the detection of stimuli at small visual angles. This is (by necessity) not the value returned as lum in the output of vismodel(). Instead, ‘green contrast’ is simply the long-wavelength receptor excitation values returned (l), when both the long wavelength receptor is specified as the ‘achromatic’ receptor and the vonkries transform is applied (as above). In the literature, green contrast may be expressed as an absolute excitation value ranging 0-1, with 0.5 representing unity (i.e. no contrast), or as the relative deviation from 0.5.

3.1.4.6.3 The Colour Opponent Coding (COC) Space

The colour opponent coding (coc) space is an earlier hymenopteran visual model (Backhaus 1991) which, although now seldom used in favour of the hexagon, may prove useful for comparative work. While the initial estimation of photoreceptor excitation is similar to that in the hexagon, the coc subsequently specifies A and B coordinates based on empirically-derived weights for the output from each photoreceptor:

\[ \begin{split} A &= -9.86E_u + 7.70E_b + 2.16E_g\\ B &= -5.17E_u + 20.25E_b - 15.08E_g \end{split} \]

Where \(E_i\) is the excitation value (hyperbolic-transformed quantum catch) in photoreceptor \(i\).

vis.flowers <- vismodel(flowers,

visual = "apis",

qcatch = "Ei",

relative = FALSE,

vonkries = TRUE,

bkg = "green"

)

coc.flowers <- colspace(vis.flowers, space = "coc")

head(coc.flowers)#> s m l x

#> Goodenia_heterophylla 0.56572097 0.8237141 0.7053057 2.288050

#> Goodenia_geniculata 0.35761236 0.8176153 0.8670134 4.642329

#> Goodenia_gracilis 0.01888788 0.1622766 0.7810589 2.750382

#> Xyris_operculata 0.21080752 0.7345122 0.6796464 5.045218

#> Eucalyptus_sp 0.55622758 0.8515289 0.8208038 2.845304

#> Faradaya_splendida 0.45855056 0.7828905 0.8565895 3.357182

#> y r.vec lum

#> Goodenia_heterophylla 3.1194224 5.407473 NA

#> Goodenia_geniculata 1.6332926 6.275622 NA

#> Goodenia_gracilis -8.5899171 11.340299 NA

#> Xyris_operculata 3.5349309 8.580149 NA

#> Eucalyptus_sp 1.9900420 4.835346 NA

#> Faradaya_splendida 0.5654568 3.922638 NAThe A and B coordinates are designated x and y in the output of coc for consistency, and while the model includes a measure of saturation in r.vec, it contains no associated measure of hue.

Figure 3.13: Flowers in the colour-opponent-coding space of Backhaus (1991), as modelling according to the honeybee.

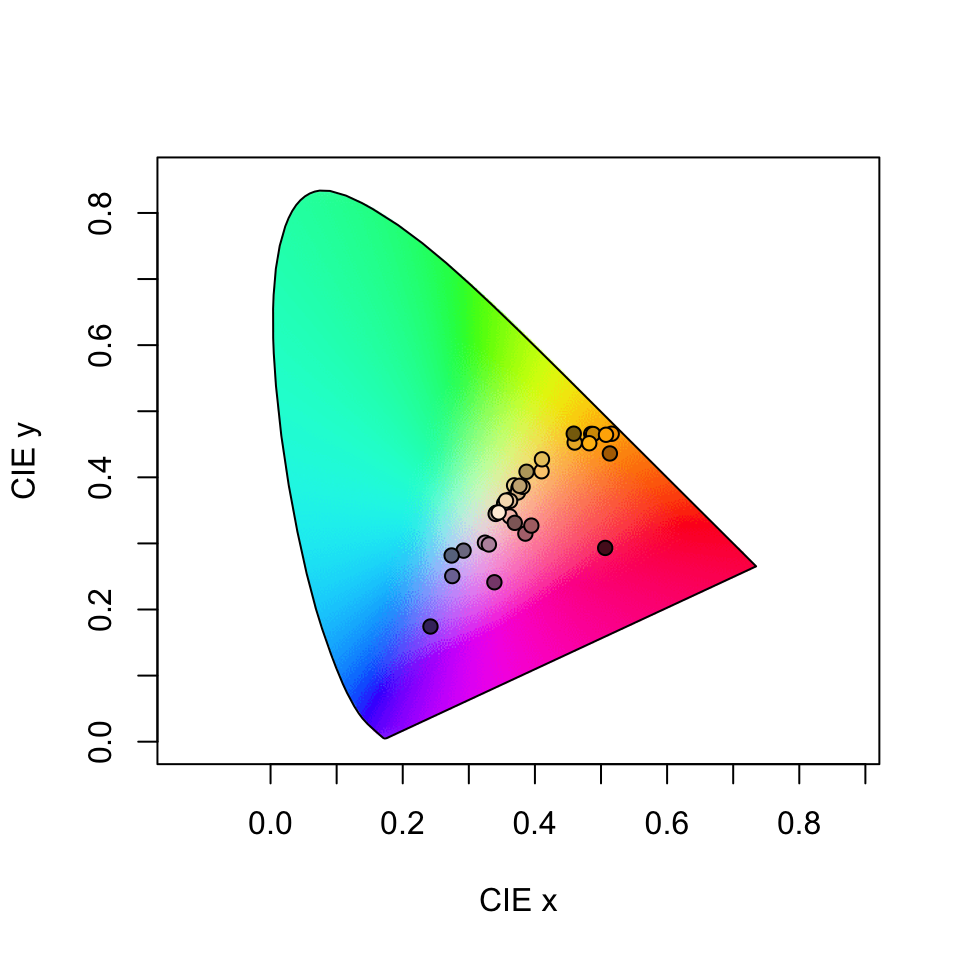

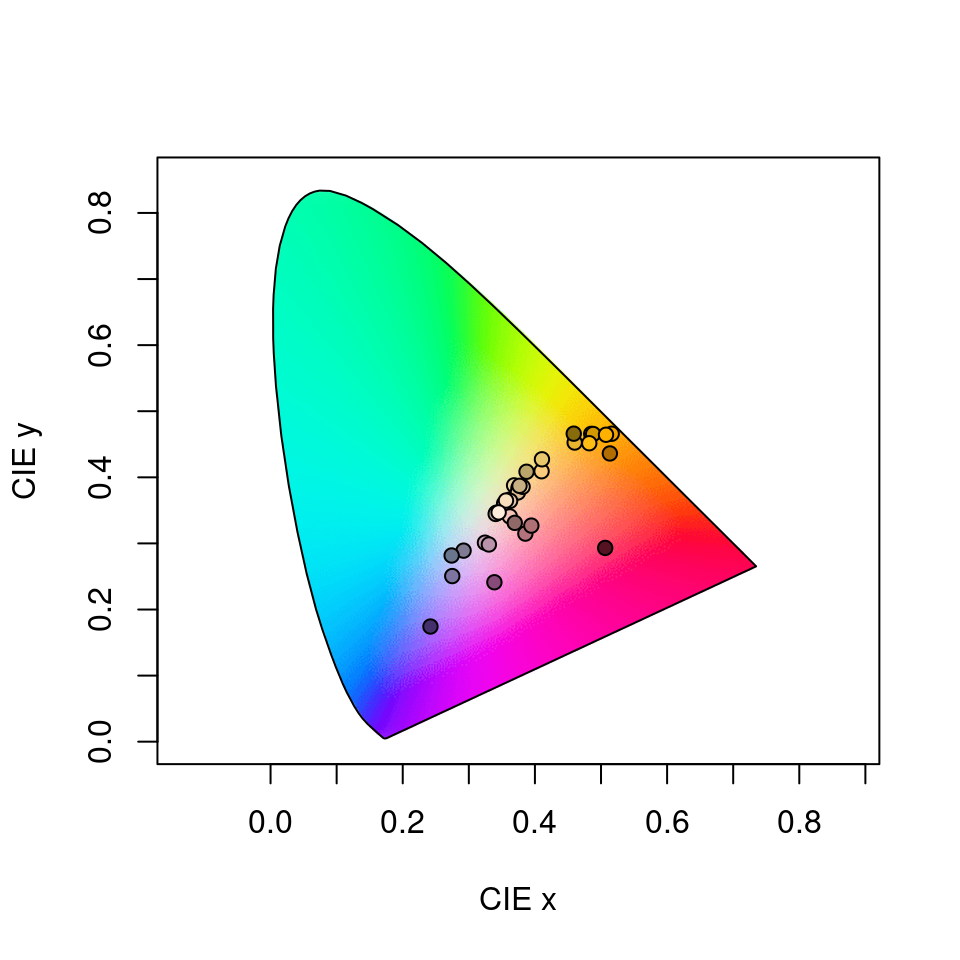

3.1.4.6.4 CIE Spaces

The CIE (International Commission on Illumination) colourspaces are a suite of models of human colour vision and perception. pavo 1.0 now includes two of the most commonly used: the foundational 1931 CIE XYZ space, and the more modern, perceptually calibrated CIE LAB space and its cylindrical CIE LCh transformation. Tristimulus values in XYZ space are calculated as:

\[ \begin{split} X &= k\int_{300}^{700}{R(\lambda)I(\lambda)\bar{x}(\lambda)\,d\lambda}\\ Y &= k\int_{300}^{700}{R(\lambda)I(\lambda)\bar{y}(\lambda)\,d\lambda}\\ Z &= k\int_{300}^{700}{R(\lambda)I(\lambda)\bar{z}(\lambda)\,d\lambda} \end{split} \]

where x, y, and z are the trichromatic colour matching functions for a ‘standard colourimetric viewer’. These functions are designed to describe an average human’s chromatic response within a specified viewing arc in the fovea (to account for the uneven distribution of cones across eye). pavo includes both the CIE 2-degree and the modern 10-degree standard observer, which can be selected in the visual option in the vismodel function. In these equations, k is the normalising factor

\[k = \frac{100}{\int_{300}^{700}{I(\lambda)\bar{y}(\lambda)\,d\lambda}}\]

and the chromaticity coordinates of stimuli are calculated as

\[ \begin{split} x &= \frac{X}{X + Y + Z}\\ y &= \frac{Y}{X + Y + Z}\\ z &= \frac{Z}{X + Y + Z} = 1 - x - y \end{split} \]

For modelling in both XYZ and LAB spaces, here we’ll use the CIE 10-degree standard observer, and assume a D65 ‘standard daylight’ illuminant. Again, although they are colourspace models, we need to set relative = FALSE to return raw quantum catch estimates, vonkries = TRUE to account for the required normalising factor (as above), and achromatic = 'none' since there is no applicable way to estimate luminance in the CIE models. As with all models that have particular requirements, vismodel() will output warnings and/or errors if unusual or non-standard arguments are specified.

vis.flowers <- vismodel(flowers,

visual = "cie10",

illum = "D65",

vonkries = TRUE,

relative = FALSE,

achromatic = "none"

)#> X Y Z x

#> Goodenia_heterophylla 0.2095846 0.2074402 0.30060475 0.2920513

#> Goodenia_geniculata 0.6415362 0.6751982 0.42423209 0.3684943

#> Goodenia_gracilis 0.5027874 0.4538738 0.01742707 0.5161620

#> Xyris_operculata 0.2847275 0.2325247 0.22131809 0.3855117

#> Eucalyptus_sp 0.4421178 0.4469747 0.40173521 0.3425072

#> Faradaya_splendida 0.6545883 0.6532237 0.28855528 0.4100487

#> y z

#> Goodenia_heterophylla 0.2890630 0.41888569

#> Goodenia_geniculata 0.3878295 0.24367620

#> Goodenia_gracilis 0.4659473 0.01789065

#> Xyris_operculata 0.3148309 0.29965743

#> Eucalyptus_sp 0.3462699 0.31122295

#> Faradaya_splendida 0.4091939 0.18075745The output is simply the tristimulus values and chromaticity coordinates of stimuli, and we can visualise our results (along with a line connecting monochromatic loci, by default) by calling

Figure 3.14: Floral reflectance in the CIEXYZ human visual model. Note that this space is not perceptually calibrated, so we cannot make inferences about the similarity or differences of colours based on their relative location.

The Lab space is a more recent development, and is a colour-opponent model that attempts to mimic the nonlinear responses of the human eye. The Lab space is also calibrated (unlike the XYZ space) such that Euclidean distances between points represent relative perceptual distances. This means that two stimuli that are farther apart in Lab space should be perceived as more different than two closer points. As the name suggests, the dimensions of the Lab space are Lightness (i.e. subjective brightness), along with two colour-opponent dimensions designated a and b. The colspace() function, when space = cielab, simply converts points from the XYZ model according to:

$$

where

\[ f(x)=\begin{cases} x^\frac{1}{3} & \text{if } x > 0.008856 \\ 7.787x + \frac{4}{29} & \text{if } x \leq 0.008856 \end{cases} \]

Here, \(X_n, Y_n, Z_n\) are neutral point values to model visual adaptation, calculated as:

\[ \begin{split} X_n &= \int_{300}^{700}{R_n(\lambda)I(\lambda)\bar{x}(\lambda)\,d\lambda}\\ Y_n &= \int_{300}^{700}{R_n(\lambda)I(\lambda)\bar{y}(\lambda)\,d\lambda}\\ Z_n &= \int_{300}^{700}{R_n(\lambda)I(\lambda)\bar{z}(\lambda)\,d\lambda} \end{split} \]

when \(R_n(\lambda)\) is a perfect diffuse reflector (i.e. 1).

#> X Y Z L

#> Goodenia_heterophylla 0.2095846 0.2074402 0.30060475 16.75107

#> Goodenia_geniculata 0.6415362 0.6751982 0.42423209 54.52316

#> Goodenia_gracilis 0.5027874 0.4538738 0.01742707 36.65092

#> Xyris_operculata 0.2847275 0.2325247 0.22131809 18.77668

#> Eucalyptus_sp 0.4421178 0.4469747 0.40173521 36.09381

#> Faradaya_splendida 0.6545883 0.6532237 0.28855528 52.74869

#> a b

#> Goodenia_heterophylla 3.110872 -18.616834

#> Goodenia_geniculata -4.478971 26.973109

#> Goodenia_gracilis 22.697331 60.437370

#> Xyris_operculata 21.382120 -2.596506

#> Eucalyptus_sp 3.297259 -1.246816

#> Faradaya_splendida 7.859790 45.351679Our output now contains the tristimulus XYZ values, as well as their Lab counterparts, which are coordinates in the Lab space. These can also be visualised in three-dimensional Lab space by calling plot:

Figure 3.15: Floral reflectance spectra represented in the CIELab model of human colour sensation.

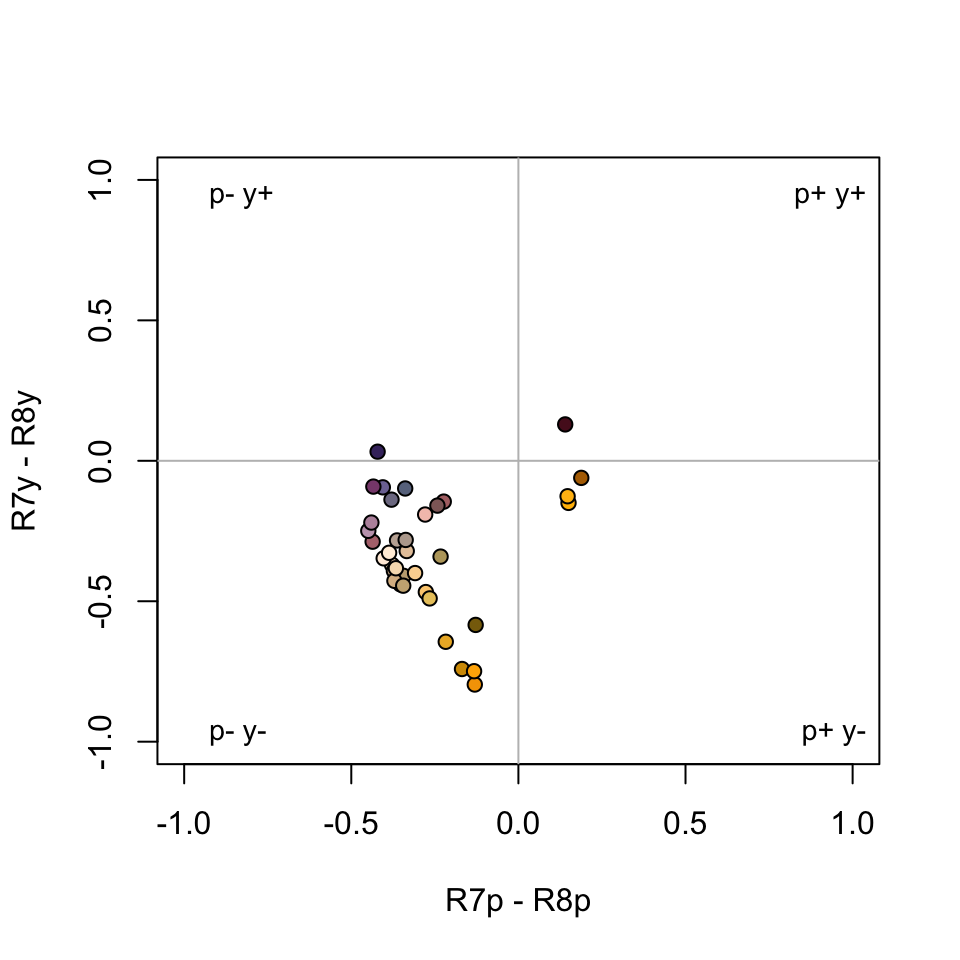

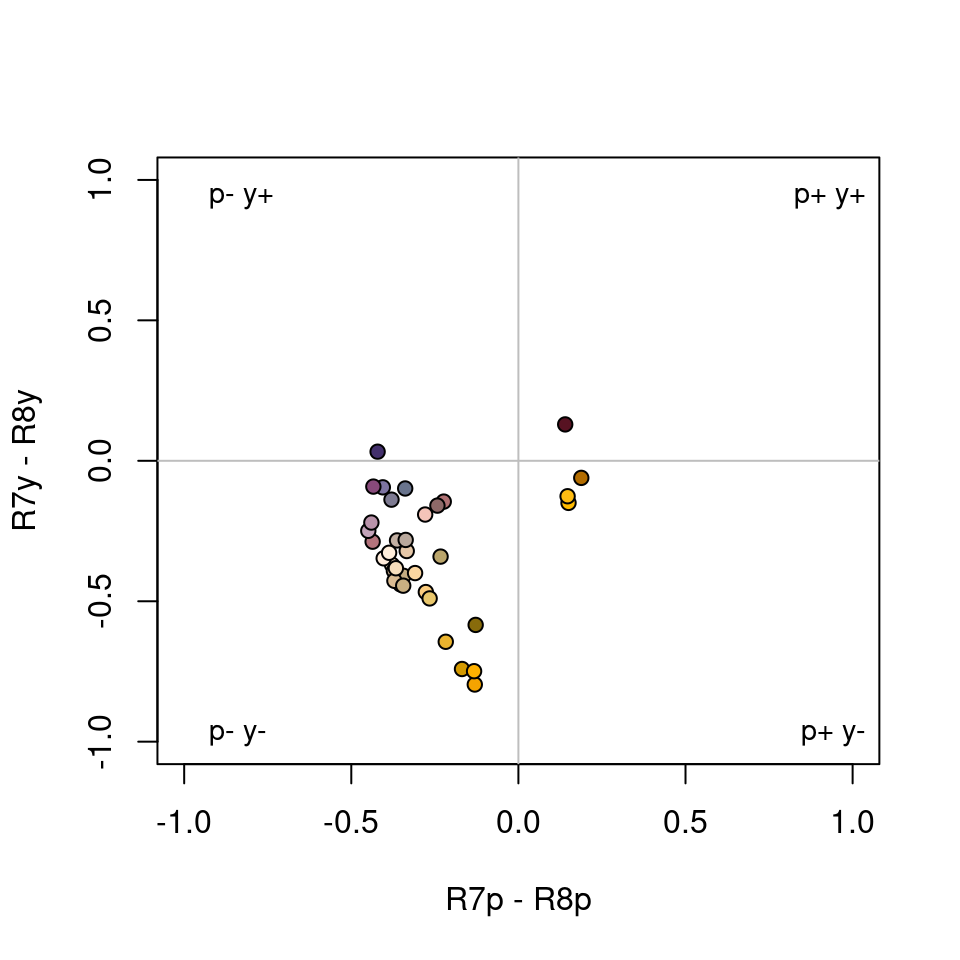

3.1.4.6.5 Categorical Fly Colourspace

The categorical colour vision model of Troje (1993) is a model of dipteran vision, based on behavioural data from the blowfly Lucilia sp. It assumes the involvement of all four dipteran photoreceptor classes (R7 & R8 ‘pale’ and ‘yellow’ subtypes), and further posits that colour vision is based on two specific opponent mechanisms (R7p - R8p, and R7y - R8y). The model assumes that all colours are perceptually grouped into one of four colour categories, and that flies are unable to distinguish between colours that fall within the same category.

We’ll use the visual systems of the muscoid fly Musca domestica, and will begin by estimating linear (i.e. untransformed) quantum catches for each of the four photoreceptors.

vis.flowers <- vismodel(flowers,

qcatch = "Qi",

visual = "musca",

achromatic = "none",

relative = TRUE

)Our call to colspace() will then simply estimate the location of stimuli in the categorical space as the difference in relative stimulation between ‘pale’ (R7p - R8p) and ‘yellow’ (R7y - R8y) photoreceptor pairs:

\[ \begin{split} x &= R7_p - R8_p\\ y &= R7_y - R8_y \end{split} \]

#> R7p R7y R8p R8y

#> Goodenia_heterophylla 0.05387616 0.18710662 0.4335986 0.3254187

#> Goodenia_geniculata 0.02080098 0.08276030 0.3737592 0.5226795

#> Goodenia_gracilis 0.01153130 0.02501417 0.1420049 0.8214496

#> Xyris_operculata 0.01270353 0.12523419 0.4488377 0.4132246

#> Eucalyptus_sp 0.03461949 0.14207848 0.3977208 0.4255812

#> Faradaya_splendida 0.03599953 0.09188560 0.3129195 0.5591953

#> x y category r.vec

#> Goodenia_heterophylla -0.3797224 -0.1383120 p-y- 0.4041279

#> Goodenia_geniculata -0.3529583 -0.4399192 p-y- 0.5640110

#> Goodenia_gracilis -0.1304736 -0.7964355 p-y- 0.8070519

#> Xyris_operculata -0.4361342 -0.2879904 p-y- 0.5226390

#> Eucalyptus_sp -0.3631013 -0.2835027 p-y- 0.4606695

#> Faradaya_splendida -0.2769200 -0.4673097 p-y- 0.5431971

#> h.theta lum

#> Goodenia_heterophylla -2.792284 NA

#> Goodenia_geniculata -2.246954 NA

#> Goodenia_gracilis -1.733176 NA

#> Xyris_operculata -2.557993 NA

#> Eucalyptus_sp -2.478681 NA

#> Faradaya_splendida -2.105745 NAAnd it is simply the signs of these differences that define the four possible fly-colour categories (p+y+, p-y+, p+y-, p-y-), which we can see in the associated plot.

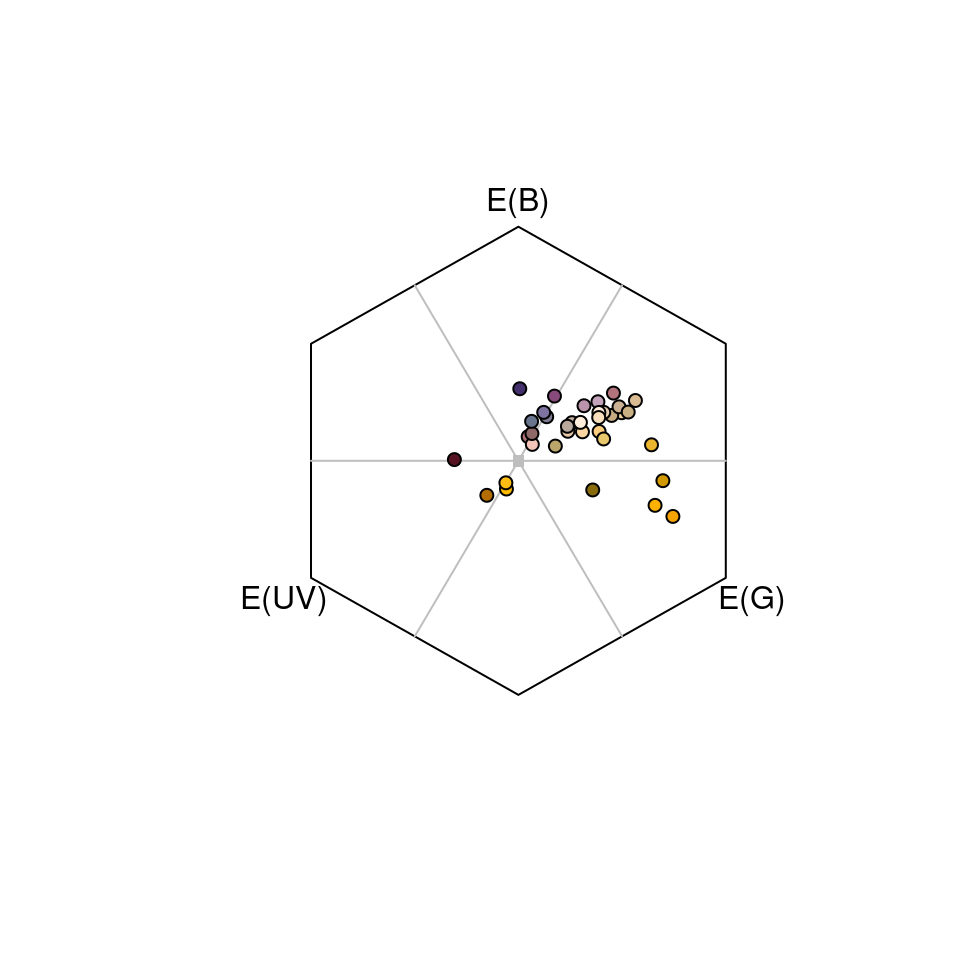

Figure 3.16: Flowers in the categorical colourspace of Troje (1993).

3.1.4.6.6 Segment Classification

The segment classification analysis (Endler 1990) does not assume any particular visual system, but instead tries to classify colours in a manner that captures common properties of many vertebrate (and some invertebrate) visual systems. In essence, it breaks down the reflectance spectrum region of interest into four equally-spaced regions, measuring the relative signal along those regions. This approximates a tetrachromatic system with ultraviolet, short, medium, and long-wavelength sensitive photoreceptors.

Though somewhat simplistic, this model captures many of the properties of other, more complex visual models, but without many of the additional assumptions these make. It also provides results in a fairly intuitive colour space, in which the angle corresponds to hue and the distance from the centre corresponds to chroma (Figure below; in fact, variables S5 and H4 from summary.rspec() are calculated from these relative segments).

Note that, while a segment analysis ranging from 300 or 400 nm to 700 nm corresponds quite closely to the human visual system colour wheel, any wavelength range can be analysed in this way, returning a 360° hue space delimited by the range used.

The segment differences or “opponents” are calculated as:

\[ \begin{split} LM &= \frac{ R_\lambda \sum_{\lambda={Q4}} R_\lambda - \sum_{\lambda={Q2}} R_\lambda }{\sum_{\lambda={min}}^{max}R_\lambda}\\ MS &= \frac{ R_\lambda \sum_{\lambda={Q3}} R_\lambda - \sum_{\lambda={Q1}} R_\lambda }{\sum_{\lambda={min}}^{max}R_\lambda} \end{split} \]

Where \(Qi\) represent the interquantile distances (e.g. \(Q1=ultraviolet\), \(Q2=blue\), \(Q3=green\) and \(Q4=red\))

The segment classification model is obtained through the colspace() argument space = 'segment', following initial modelling with vismodel(). The example below uses idealized reflectance spectra to illustrate how the avian colour space defined from the segment classification maps to the human colour wheel:

fakedata1 <- vapply(

seq(100, 500, by = 20),

function(x) {

rowSums(cbind(

dnorm(300:700, x, 30),

dnorm(300:700, x + 400, 30)

))

}, numeric(401)

)

# creating idealized specs with varying saturation

fakedata2 <- vapply(

c(500, 300, 150, 105, 75, 55, 40, 30),

function(x) dnorm(300:700, 550, x),

numeric(401)

)

fakedata1 <- as.rspec(data.frame(wl = 300:700, fakedata1))#> wavelengths found in column 1

fakedata1 <- procspec(fakedata1, "max")#> processing options applied:

#> Scaling spectra to a maximum value of 1

fakedata2 <- as.rspec(data.frame(wl = 300:700, fakedata2))#> wavelengths found in column 1

fakedata2 <- procspec(fakedata2, "sum")#> processing options applied:

#> Scaling spectra to a total area of 1

fakedata2 <- procspec(fakedata2, "min")#> processing options applied:

#> Scaling spectra to a minimum value of zero

# converting reflectance to percentage

fakedata1[, -1] <- fakedata1[, -1] * 100

fakedata2[, -1] <- fakedata2[, -1] / max(fakedata2[, -1]) * 100

# combining and converting to rspec

fakedata.c <- data.frame(

wl = 300:700,

fakedata1[, -1],

fakedata2[, -1]

)

fakedata.c <- as.rspec(fakedata.c)#> wavelengths found in column 1

# segment classification analysis

seg.vis <- vismodel(fakedata.c,

visual = "segment",

achromatic = "all"

)

seg.fdc <- colspace(seg.vis, space = "segment")

# plot results

plot(seg.fdc, col = spec2rgb(fakedata.c))

Figure 3.17: Idealized reflectance spectra and their projection on the axes of segment classification

3.1.4.6.7 Colourspace Distances with coldist

Under the colourspace framework, colour distances can be calculated simply as Euclidean distances of the relative cone stimulation data, either log-transformed or not, depending on how it was defined. Euclidean distances can be computed in R using the coldist() function on the colspace() output:

#> Quantum catch are relative, distances may not be meaningful#> Calculating unweighted Euclidean distances#> patch1 patch2 dS dL

#> 1 Goodenia_heterophylla Goodenia_geniculata 0.06695922 NA

#> 2 Goodenia_heterophylla Goodenia_gracilis 0.20467411 NA

#> 3 Goodenia_heterophylla Xyris_operculata 0.05407934 NA

#> 4 Goodenia_heterophylla Eucalyptus_sp 0.01901724 NA

#> 5 Goodenia_heterophylla Faradaya_splendida 0.05027645 NA

#> 6 Goodenia_heterophylla Gaultheria_hispida 0.03897671 NASpecialised colourspace models such as the colour-hexagon, coc space, CIELab and CIELCh models have their own distance measures, which are returned be default when run through coldist(). Be sure to read the ?coldist documentation, and the original publications, to understand what is being returned. As an example, if we run the results of colour-hexagon modelling through coldist():

# Model flower colours according to a honeybee

vis.flowers <- vismodel(flowers,

visual = "apis",

qcatch = "Ei",

relative = FALSE,

vonkries = TRUE,

achromatic = "l",

bkg = "green"

)

hex.flowers <- colspace(vis.flowers, space = "hexagon")

# Estimate colour distances. No need to specify

# relative receptor densities, noise etc., which

# only apply in the case of receptor-noise modelling

dist.flowers <- coldist(hex.flowers)#> Calculating unweighted Euclidean distances

head(dist.flowers)#> patch1 patch2 dS dL

#> 1 Goodenia_heterophylla Goodenia_geniculata 0.3207265 NA

#> 2 Goodenia_heterophylla Goodenia_gracilis 0.6870945 NA

#> 3 Goodenia_heterophylla Xyris_operculata 0.3025298 NA

#> 4 Goodenia_heterophylla Eucalyptus_sp 0.1111375 NA

#> 5 Goodenia_heterophylla Faradaya_splendida 0.2324927 NA

#> 6 Goodenia_heterophylla Gaultheria_hispida 0.2280836 NAthe chromatic contrasts dS and achromatic contrasts dL are expressed as Euclidean distances in the hexagon, known as ‘hexagon units’ (Chittka 1992). If we had instead used the colour-opponent coding space the units would have been city-bloc distances, while in the CIELab model the distances would be derived from the CIE colour-distance formula (2000).

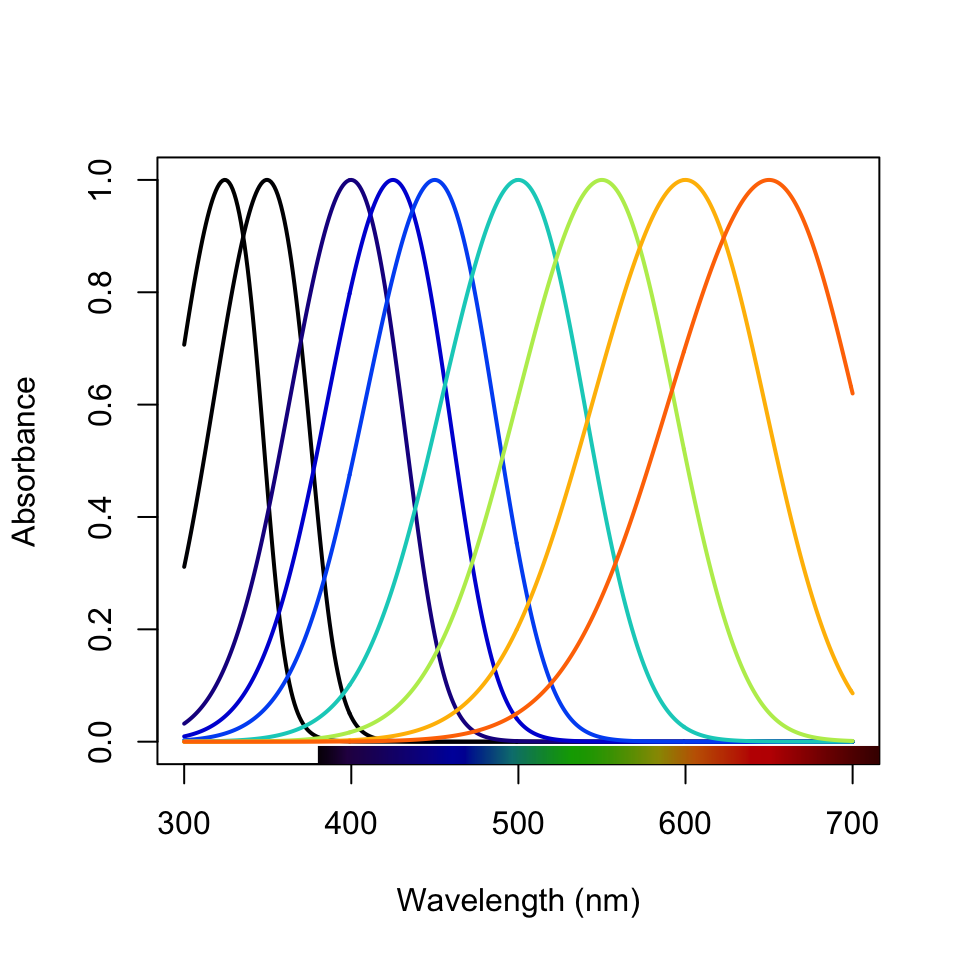

3.1.4.6.8 Distances in N-Dimensions

The coldist() function is no longer limited to di-, tri- or tetrachromatic visual systems. The function has been generalised, and can now calculate colour distances for n-dimensional visual phenotypes. This means there is no limit on the dimensionality of visual systems that may be input, which may prove useful for modelling nature’s extremes (e.g. butterflies, mantis shrimp) or for simulation-based work. Naturally, since these calculations aren’t largely implemented elsewhere, we recommend caution and validation of results prior to publication.

# Create an arbitrary visual phenotype with 10 photoreceptors

fakemantisshrimp <- sensmodel(

c(

325, 350, 400,

425, 450, 500,

550, 600, 650,

700

),

beta = FALSE, integrate = FALSE

)

# Convert to percentages, just to colour the plot

fakemantisshrimp.colours <- fakemantisshrimp * 100

fakemantisshrimp.colours[, "wl"] <- fakemantisshrimp[, "wl"]

plot(fakemantisshrimp,

col = spec2rgb(fakemantisshrimp.colours), lwd = 2,

ylab = "Absorbance"

)

Figure 3.18: Visual system of a pretend mantis shrimp with 10 cones

# Run visual model and calculate colour distances

vm.fms <- vismodel(flowers,

visual = fakemantisshrimp,

relative = FALSE, achromatic = FALSE

)

JND.fms <- coldist(vm.fms, n = c(1, 1, 2, 2, 3, 3, 4, 4, 5, 5))#> Calculating noise-weighted Euclidean distances

head(JND.fms)#> patch1 patch2 dS dL

#> 1 Goodenia_heterophylla Goodenia_geniculata 16.297557 NA

#> 2 Goodenia_heterophylla Goodenia_gracilis 48.390309 NA

#> 3 Goodenia_heterophylla Xyris_operculata 30.760482 NA

#> 4 Goodenia_heterophylla Eucalyptus_sp 6.044184 NA

#> 5 Goodenia_heterophylla Faradaya_splendida 15.901435 NA

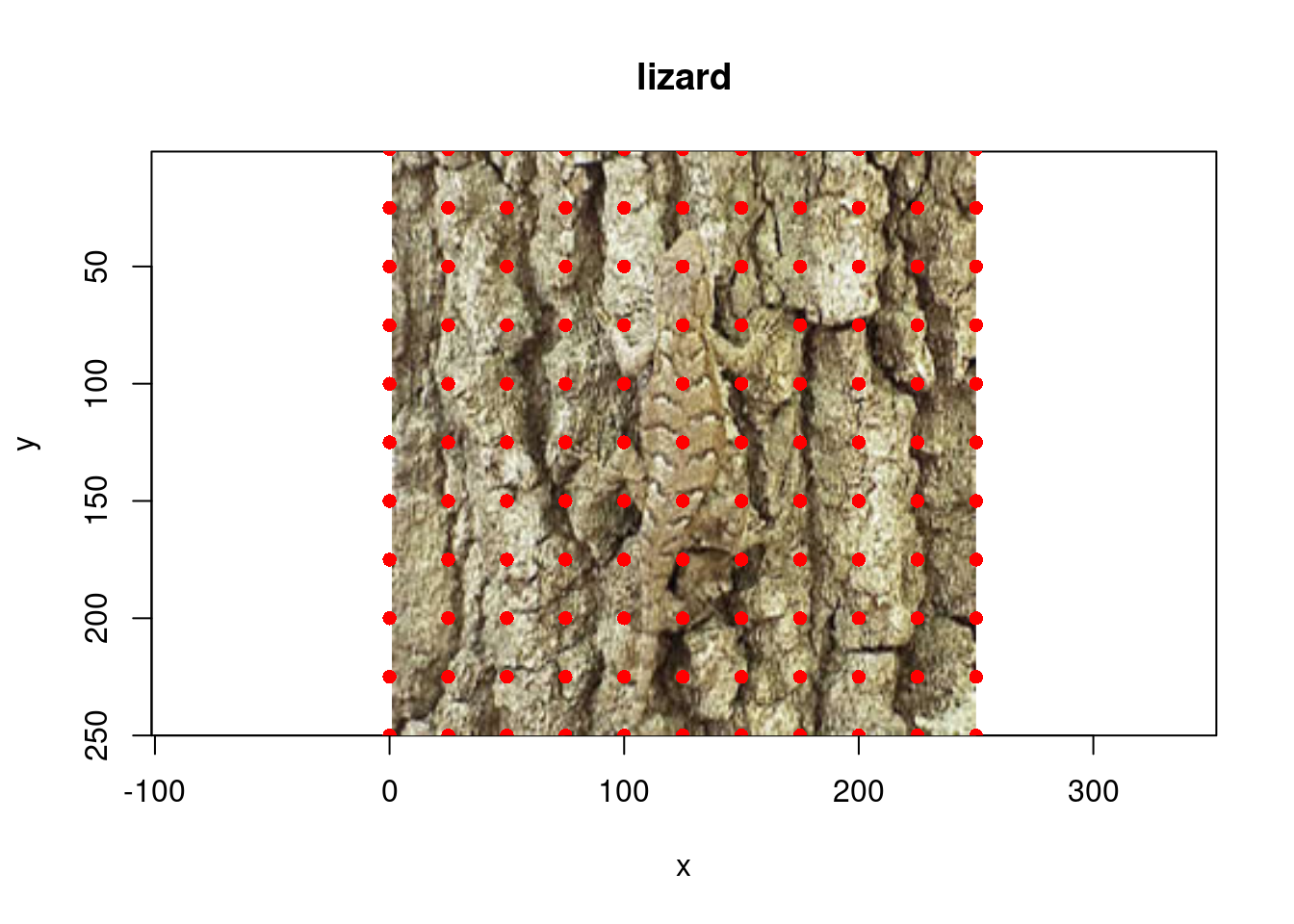

#> 6 Goodenia_heterophylla Gaultheria_hispida 11.188246 NA3.2 Analysing Spatial Data

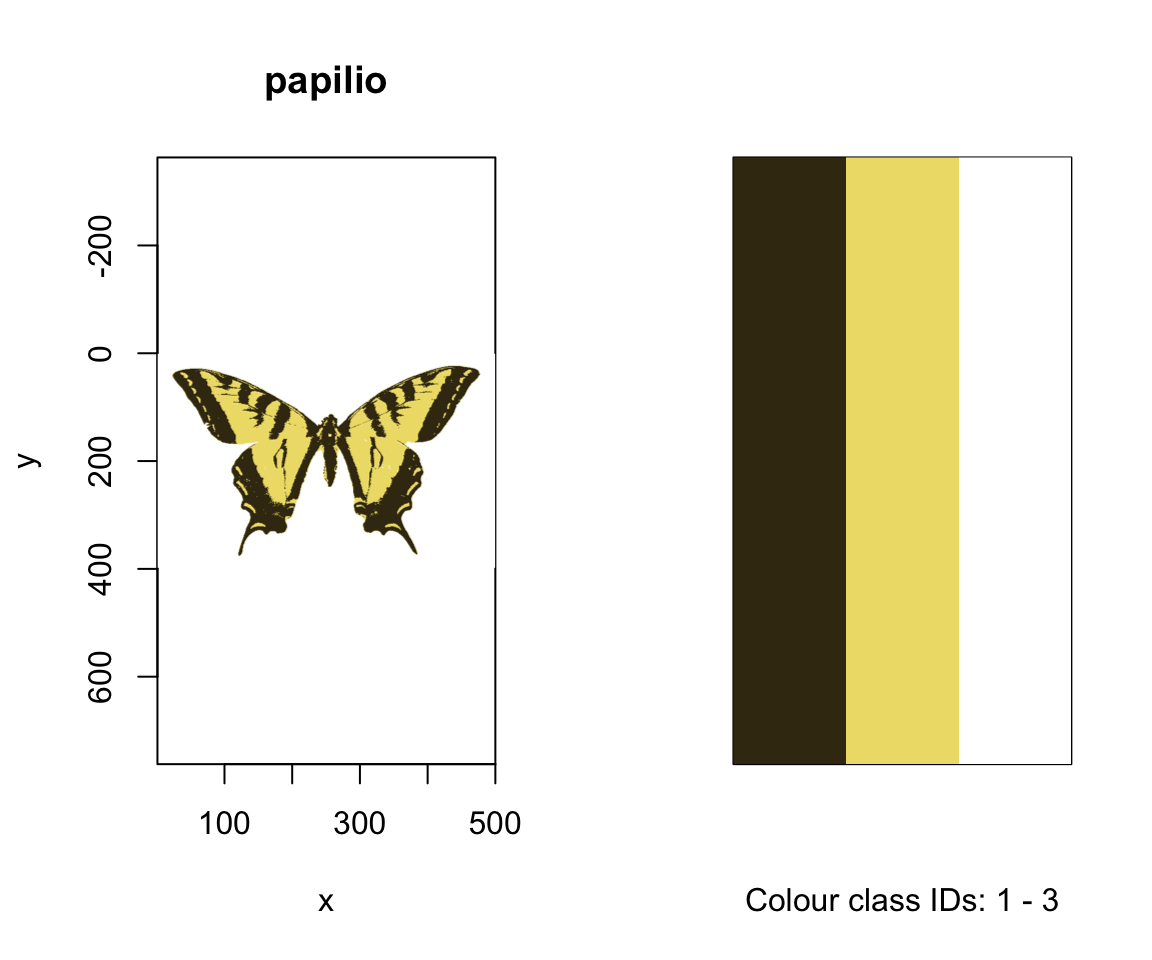

pavo now allows for the combined analysis of spectral data and spatial data. Note that ‘spatial’ data of course include images, but may also draw on spatially-sampled spectra, as explored below. Thus while much of the below deals with image-based processing and analysis, images are not necessary for many spatial analyses and indeed may perform worse than spectrally-based analyses in some cases. Further, image data should seldom be used without input from spectral measurements. Even in the simple butterfly example below, users should first validate the presence and number of discrete colours in a patch via spectral measurement and visual modelling (i.e. the ‘spectral’ information), which is then augmented by image-based clustering of these a priori identified patches to extract the ‘spatial’ information. Using uncalibrated images alone for inferences about colour pattern perception may well yield unreliable results, given the radical differences between human (camera) and non-human animal perception.

3.2.1 Image-based colour classification

We first import our images with getimg(), which functions in a similar manner to getspec(). Since we are importing more than one image, we can simply point the function to the folder containing the images, and it will use parallel processing (where possible) to import any jpg, bmp, or png images in the folder. As with the raw spectra, these images are also available at the package repository [here][data-location], but are included in the package installation for convenience.

butterflies <- getimg(system.file("testdata/images/butterflies", package = "pavo"))#> 2 files found; importing images.The segmentation of RGB images into discrete colour-classes is an useful step on the road to several analyses, and this is carried out in pavo via classify(). The function currently implements k-means clustering which, and offers some flexibility in the way in which it is carried out. In the simplest case, we can specify an image or image(s) as well as the number of discrete colours in each, and allow the k-means clustering algorithm to do its work. Note that the specification of k, the number of discrete colours in an image, is a crucial step to be estimated a priori beforehand, typically through the use of visual modelling to estimate the number of discriminable colours in an ‘objective’ manner. There are a suite of possible ways in which this may be achieved, though, with one detailed below as part of example 2.

Since we have two images, with (arguably) four and three colours each, including the white background, we can specify this information using kcols, before visualising the results with summary. The summary function, with plot = TRUE, is also particularly useful for understanding the colour-class labels, or ID’s, assigned to each colour, as well as visualising the accuracy of the classification process, which may be enhanced by specifying strongly-contrasting ‘false’ colours for plotting through the col argument.

#> Image classification in progress...

# Note that we could simply feed the list of

# images to summary, rather than specifying

# individual images, and they would progress